Note:

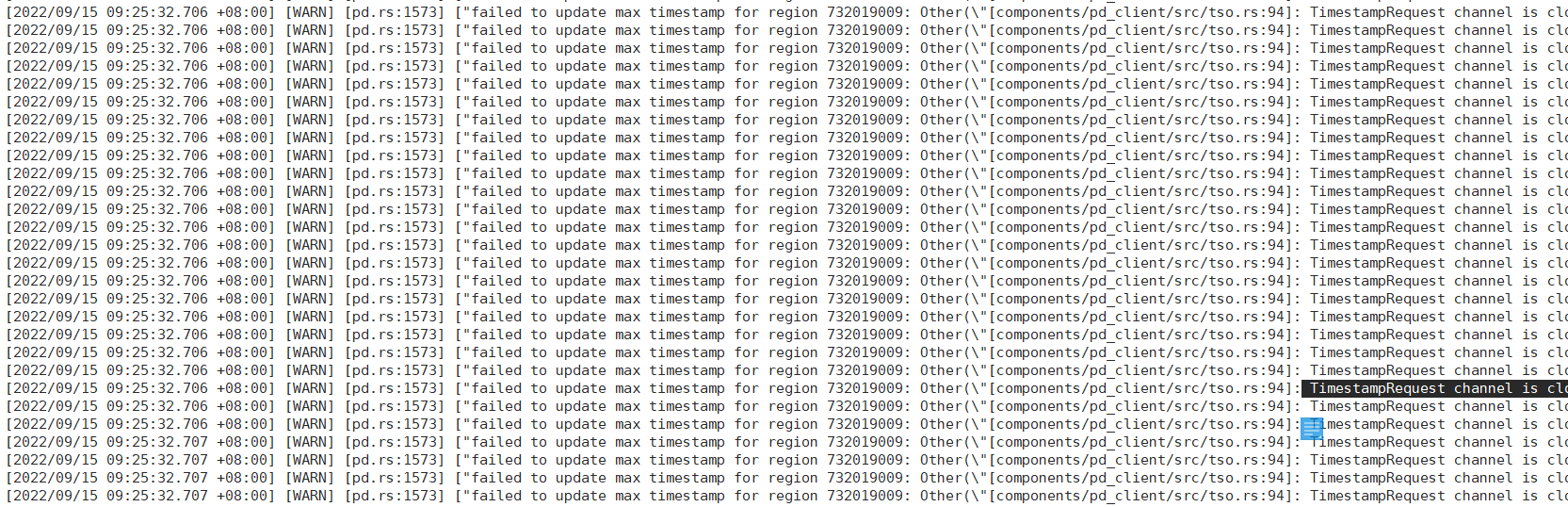

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: TiKV error: failed to update max timestamp for region 732019009: Other("[components/pd_client/src/tso.rs:94]: TimestampRequest channel is closed"

Hello~ Have you checked if the PD status is normal? Both errors are pointing to a possible anomaly in the PD cluster. You can check the PD leader’s logs and network for any abnormalities and troubleshoot from there.

If there is a problem with PD, is there a command to designate one of the PD members as the leader?

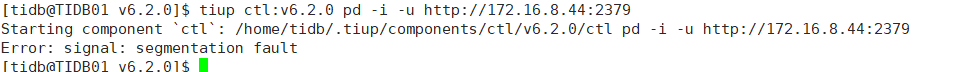

I later upgraded to version 6.2.0, but there are still issues.

Please check the output of tiup cluster display. Can you also describe how the upgrade was done?

Can we confirm which PD member node has the issue, or is the entire PD cluster affected? If only one PD node is faulty, you can try taking that node offline to see whether the cluster then functions normally.

Is the reason for this that the PD leader changed during the upgrade?

Check if there are any errors or warning messages in the PD logs.

Also, review the log information during the upgrade.
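As a rough sketch of the log check suggested above, the following filters a PD log for warning and error lines. The log path is an assumption based on tiup's default deploy layout (/tidb-deploy/pd-2379/log/pd.log) and is not taken from this thread; the function name pd_log_problems is made up for illustration.

```shell
# Hypothetical path: tiup's default deploy layout. Adjust to your deploy_dir.
PD_LOG="${PD_LOG:-/tidb-deploy/pd-2379/log/pd.log}"

# Print only WARN, ERROR and FATAL lines from the given log file.
# TiDB components write the level in square brackets, e.g. [ERROR].
pd_log_problems() {
  grep -E '\[(WARN|ERROR|FATAL)\]' "$1"
}

if [ -r "${PD_LOG}" ]; then
  # Show the 50 most recent problem lines.
  pd_log_problems "${PD_LOG}" | tail -n 50
else
  echo "PD log not found at ${PD_LOG}; set PD_LOG to the real path" >&2
fi
```

Running this on each PD node, starting with the last known leader, should show whether the problem is limited to one member.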

After upgrading to v6.1.1 using tiup cluster upgrade tidb-kp-pms v6.1.1, there were issues with PD. I then used tiup cluster upgrade tidb-kp-pms v6.2.0 --offline to upgrade, but the issues still persist.

It kept reporting errors, so I deleted the old logs. Now I only have the current logs.

If there is an issue with PD, how can we designate one as the PD leader?

In this situation, there won’t be a split-brain issue, but a leader cannot be elected.
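For reference, when PD still has a quorum and a working leader, pd-ctl can show member health and move the leader by hand. The sketch below is only illustrative: the PD address and the target member name pd-2 are placeholders, not values from this thread, and the pd_ctl wrapper is a made-up helper that prints each command by default so it can be reviewed before running. If no leader can be elected at all, as described above, a leader transfer will not succeed, because the request has to be handled by an existing leader.

```shell
# Placeholders (assumptions, not from the thread): set these for your cluster.
PD_ADDR="${PD_ADDR:-http://127.0.0.1:2379}"   # client URL of any PD member
CTL_VERSION="${CTL_VERSION:-v6.2.0}"          # should match the cluster version
TARGET="${TARGET:-pd-2}"                      # name of the member to promote

# Hypothetical helper: prints the pd-ctl command by default.
# Set DRY_RUN=0 to actually run it through tiup.
pd_ctl() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "tiup ctl:${CTL_VERSION} pd -u ${PD_ADDR} $*"
  else
    tiup "ctl:${CTL_VERSION}" pd -u "${PD_ADDR}" "$@"
  fi
}

pd_ctl member                              # list all PD members and the current leader
pd_ctl health                              # per-member health status
pd_ctl member leader show                  # show only the current leader
pd_ctl member leader transfer "${TARGET}"  # ask the leader to hand over to TARGET
```

Member names for TARGET come from the output of the member command.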