Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: TiKV fails to start; nofile limit too low, and the configuration change does not take effect (tikv启动失败,nofile数量太少,配置后不生效)

[TiDB Usage Environment] Production Environment / Testing / PoC

[TiDB Version] v6.4.0

[Reproduction Path] Operations performed that led to the issue

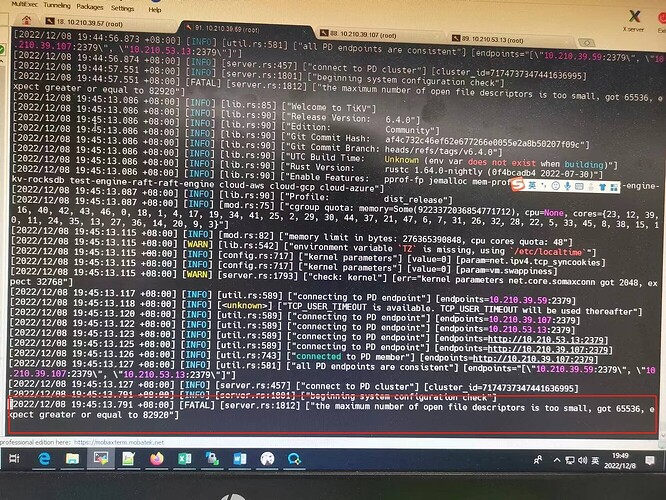

[Encountered Issue: Issue Phenomenon and Impact] TiKV fails to start.

[Resource Configuration]

[Attachments: Screenshots/Logs/Monitoring]

Have you tried restarting the system after changing the configuration?

How did you install the cluster? Didn’t you use tiup?

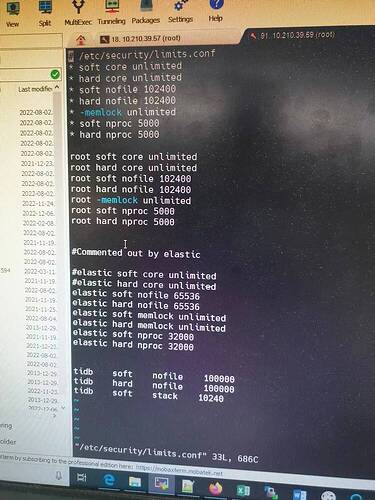

Check whether this file contains the following line:

session required pam_limits.so

For the settings in limits.conf to take effect, the pam_limits.so module must be loaded by the PAM configuration used at login.

Check the /etc/pam.d/login file for the following line (add it if it’s not there):

session required /lib64/security/pam_limits.so

Note:

Many people edit limits.conf without testing it, and later find that the settings do not take effect.

A common cause is that the module path does not match the system architecture:

For 32-bit systems:

session required /lib/security/pam_limits.so

For 64-bit systems:

session required /lib64/security/pam_limits.so
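The two checks above (an active `session required ... pam_limits.so` line, and a module path that matches the architecture) can be scripted. Below is a minimal sketch under our own assumptions: it only recognizes the `session required` form quoted in this thread, treats lines starting with `#` as comments, and checks that absolute module paths actually exist. The function names are hypothetical helpers, not part of any tool.

```python
import os
import re

# Matches an active PAM line of the form:
#   session required [/path/to/]pam_limits.so [args...]
PAM_LIMITS_RE = re.compile(r"^\s*session\s+required\s+(\S*pam_limits\.so)\b")


def find_pam_limits_modules(pam_text: str) -> list[str]:
    """Hypothetical helper: module paths of every active pam_limits.so session line."""
    modules = []
    for line in pam_text.splitlines():
        if line.lstrip().startswith("#"):
            continue  # commented-out lines are ignored by PAM
        match = PAM_LIMITS_RE.match(line)
        if match:
            modules.append(match.group(1))
    return modules


def missing_module_paths(modules: list[str]) -> list[str]:
    """Hypothetical helper: absolute module paths that do not exist on this machine.

    Bare names such as "pam_limits.so" are resolved by PAM from its own module
    directory, so only absolute paths are checked here.
    """
    return [m for m in modules if os.path.isabs(m) and not os.path.exists(m)]


if __name__ == "__main__":
    path = "/etc/pam.d/login"
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            modules = find_pam_limits_modules(f.read())
        if not modules:
            print(f"{path}: no active 'session required pam_limits.so' line")
        for m in missing_module_paths(modules):
            print(f"{path}: module path does not exist: {m}")
    else:
        print(f"{path} not found")
```

A 64-bit system configured with the `/lib/security/` path (or the reverse) would show up in the "module path does not exist" output.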

Log in as the tidb user and run ulimit -a to check whether the nofile limit (the “open files” line) has been applied.
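A rough Python equivalent of `ulimit -n` (soft limit) and `ulimit -Hn` (hard limit), using the standard `resource` module. It reports the limits of the Python process itself, which it inherits from the shell that starts it, so run it from the tidb user’s login shell. This is an illustrative sketch; `nofile_limits` and `describe` are hypothetical helper names.

```python
import resource


def nofile_limits() -> tuple[int, int]:
    """Hypothetical helper: (soft, hard) RLIMIT_NOFILE of the current process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


def describe(limit: int) -> str:
    """Hypothetical helper: render RLIM_INFINITY as 'unlimited', like ulimit does."""
    return "unlimited" if limit == resource.RLIM_INFINITY else str(limit)


if __name__ == "__main__":
    soft, hard = nofile_limits()
    # The soft limit is what the process is actually held to; the hard limit is
    # the ceiling an unprivileged process may raise its soft limit to.
    print(f"open files (nofile): soft={describe(soft)} hard={describe(hard)}")
```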

It was installed using tiup.

Is there only this TiKV service instance on this node? Or are there other services as well?

I’m quite puzzled as to why the number of file handles needs to be so high…

65536 is already a lot, but the logs indicate it needs 82920…

It has taken effect. The output of ulimit -n shows 100000.
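`ulimit -n` in a login shell shows that shell’s limit, which is not necessarily what an already-running tikv-server process received; that depends on how the process was started. On Linux, the effective limits of any process can be read from `/proc/<pid>/limits`. Below is a minimal sketch that extracts the “Max open files” row; the PID (for example from `pgrep tikv-server`) is passed as an argument, and `parse_max_open_files` is a hypothetical helper.

```python
import os
import sys

ROW = "Max open files"


def parse_max_open_files(limits_text: str) -> tuple[str, str]:
    """Hypothetical helper: (soft, hard) from the 'Max open files' row of /proc/<pid>/limits."""
    for line in limits_text.splitlines():
        if line.startswith(ROW):
            soft, hard = line[len(ROW):].split()[:2]
            return soft, hard
    raise ValueError(f"no {ROW!r} row found")


if __name__ == "__main__":
    # Usage: python3 this_script.py <pid>; defaults to this script's own process.
    pid = sys.argv[1] if len(sys.argv) > 1 else "self"
    path = f"/proc/{pid}/limits"
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            soft, hard = parse_max_open_files(f.read())
        print(f"{path}: Max open files soft={soft} hard={hard}")
    else:
        print(f"{path} not found (process gone, or not Linux?)")
```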

I’m not sure. Isn’t 82920 just the number of handles TiKV requires? Elasticsearch, for example, requires at least 65535, so I assumed TiKV had a similar requirement.
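A plausible explanation for the exact figure 82920, based on our reading of TiKV’s startup check rather than anything confirmed in this thread: TiKV appears to require a nofile limit of at least `rocksdb.max-open-files` + `raftdb.max-open-files` plus a fixed reserve. Assuming the defaults of 40960 for both settings and a reserve of 1000, that gives 40960 + 40960 + 1000 = 82920, which matches the log. Check these defaults against the configuration reference for your TiKV version; the constants and function names below are our assumptions.

```python
# ASSUMPTIONS (verify for your TiKV version / tikv.toml):
#   rocksdb.max-open-files and raftdb.max-open-files both default to 40960,
#   and TiKV adds a fixed reserve of 1000 descriptors on top.
ROCKSDB_MAX_OPEN_FILES = 40960
RAFTDB_MAX_OPEN_FILES = 40960
RESERVED_FDS = 1000


def required_nofile(rocksdb: int = ROCKSDB_MAX_OPEN_FILES,
                    raftdb: int = RAFTDB_MAX_OPEN_FILES,
                    reserved: int = RESERVED_FDS) -> int:
    """Hypothetical helper: estimated nofile limit TiKV asks for, under the assumptions above."""
    return rocksdb + raftdb + reserved


def is_enough(nofile_limit: int, required: int) -> bool:
    """Hypothetical helper: does a given soft nofile limit satisfy the requirement?"""
    return nofile_limit >= required


if __name__ == "__main__":
    need = required_nofile()
    for limit in (65536, 100000, 1000000):
        print(limit, "ok" if is_enough(limit, need) else f"too small (need {need})")
```

Under these assumptions, the 65536 in the log falls short, while the 100000 reported by ulimit -n would clear the bar, provided the TiKV process actually receives that limit.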

When you deploy with tiup, it tunes these parameters for you. It also runs its own environment checks, and if the parameters are not set correctly, you cannot proceed to the next step…

I suggest checking this node’s environment first. Without knowing what is different about it, there is not much more we can do.

You can first run tiup cluster check to look for potential risks in the cluster.

I tried them; none of them worked.

I ran the check, and everything passed except the CPU mode item. I also changed the nofile value for TiDB, which had been set automatically to 1,000,000, down to 100,000. That should be fine, right?
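The nofile values discussed here live in /etc/security/limits.conf lines of the form `<domain> <type> nofile <value>`. Below is a minimal sketch that reports which nofile values a given user would receive from such a file. It makes several simplifying assumptions. Only the exact user name and the `*` wildcard are considered, and group (`@group`) and UID-range domains are skipped. Drop-in files under /etc/security/limits.d/ are not read. Following pam_limits’ precedence as we understand it, a user-specific entry beats the wildcard, while later lines win within the same domain. `nofile_entries` is a hypothetical helper.

```python
from pathlib import Path


def nofile_entries(limits_text: str, user: str) -> dict[str, str]:
    """Hypothetical helper: {'soft': ..., 'hard': ...} nofile values that apply to `user`."""
    by_domain: dict[str, dict[str, str]] = {"*": {}, user: {}}
    for raw in limits_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        fields = line.split()
        if len(fields) != 4:
            continue
        domain, kind, item, value = fields
        if item != "nofile" or domain not in by_domain:
            continue  # other items, groups, and UID ranges are out of scope here
        # A type of "-" sets both the soft and the hard limit.
        for k in (("soft", "hard") if kind == "-" else (kind,)):
            if k in ("soft", "hard"):
                by_domain[domain][k] = value  # later lines win within a domain
    # Assumed precedence: user-specific entries override the '*' wildcard.
    return {**by_domain["*"], **by_domain[user]}


if __name__ == "__main__":
    conf = Path("/etc/security/limits.conf")
    if conf.exists():
        print(nofile_entries(conf.read_text(encoding="utf-8"), "tidb"))
```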

After a closer look, the problem here appears to be the limit on file descriptors rather than the limit on open files. The two are different, as explained in this CSDN blog post: linux文件描述符open file descriptors与open files的区别 (“The difference between open file descriptors and open files in Linux”). Run sysctl -a | grep file and check the returned value, which should be 65536 as indicated in your logs. To change it, edit the sysctl.conf file, or apply the change immediately with: echo 6553560 > /proc/sys/fs/file-max.
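In Linux terms, the two limits being contrasted here are fs.file-max (a kernel-wide cap on open file handles across all processes, the value `sysctl fs.file-max` shows) and nofile/RLIMIT_NOFILE (a per-process cap set through limits.conf or ulimit). Below is a minimal sketch that prints both side by side, so the number in a log message can be matched against each. `parse_file_nr` is a hypothetical helper; /proc/sys/fs/file-nr holds three numbers: allocated handles, free allocated handles, and the system-wide maximum.

```python
import resource
from pathlib import Path


def parse_file_nr(text: str) -> tuple[int, int, int]:
    """Hypothetical helper: (allocated, free, system_max) from /proc/sys/fs/file-nr."""
    allocated, free, system_max = (int(x) for x in text.split())
    return allocated, free, system_max


if __name__ == "__main__":
    file_nr = Path("/proc/sys/fs/file-nr")
    if file_nr.exists():
        allocated, _free, system_max = parse_file_nr(file_nr.read_text())
        # System-wide: shared by every process on the host.
        print(f"system-wide (fs.file-max): {allocated} allocated of {system_max}")
    # Per-process: the limit this process (and its children) inherited.
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    print(f"per-process (nofile) for this process: soft={soft} hard={hard}")
```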

655636 is the nofile value I configured earlier, which is why it shows up. I also checked /etc/sysctl.conf, and those values are all set to 102400. I did not find 65536 anywhere else.

Oh, in any case, the deployment documentation does require both of these settings, so please double-check them. I’ll try changing the parameters in the test environment.
