Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

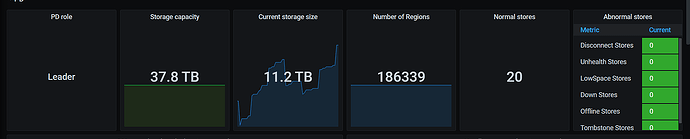

Original topic: tikv has too many regions

【TiDB Usage Environment】Production Environment

【TiDB Version】

【Reproduction Path】Inspection

【Encountered Problem】During inspection, it was found that TiKV has too many regions

【Resource Configuration】Enter TiDB Dashboard - Cluster Info - Hosts and take a screenshot of this page

【Attachment: Screenshot/Log/Monitoring】

mysql> select ITEM, SEVERITY, REFERENCE, VALUE, DETAILS from information_schema.INSPECTION_RESULT where ITEM like 'region-count';
+--------------+----------+-----------+----------+------------------------------------------------+
| ITEM         | SEVERITY | REFERENCE | VALUE    | DETAILS                                        |
+--------------+----------+-----------+----------+------------------------------------------------+
| region-count | warning  | <= 20000  | 28774.00 | 10.139.111.67:20161 tikv has too many regions  |
| region-count | warning  | <= 20000  | 28356.00 | 10.139.111.67:20160 tikv has too many regions  |
| region-count | warning  | <= 20000  | 28096.00 | 10.139.111.103:20163 tikv has too many regions |
| region-count | warning  | <= 20000  | 28045.00 | 10.139.111.101:20161 tikv has too many regions |
| region-count | warning  | <= 20000  | 28022.00 | 10.139.111.103:20161 tikv has too many regions |
| region-count | warning  | <= 20000  | 28015.00 | 10.139.111.103:20162 tikv has too many regions |
| region-count | warning  | <= 20000  | 28009.00 | 10.139.111.104:20162 tikv has too many regions |
| region-count | warning  | <= 20000  | 27984.00 | 10.139.111.101:20160 tikv has too many regions |
| region-count | warning  | <= 20000  | 27981.00 | 10.139.111.102:20163 tikv has too many regions |
| region-count | warning  | <= 20000  | 27958.00 | 10.139.111.104:20160 tikv has too many regions |
| region-count | warning  | <= 20000  | 27954.00 | 10.139.111.102:20160 tikv has too many regions |
| region-count | warning  | <= 20000  | 27940.00 | 10.139.111.104:20163 tikv has too many regions |
| region-count | warning  | <= 20000  | 27891.00 | 10.139.111.101:20163 tikv has too many regions |
| region-count | warning  | <= 20000  | 27879.00 | 10.139.111.101:20162 tikv has too many regions |
| region-count | warning  | <= 20000  | 27865.00 | 10.139.111.104:20161 tikv has too many regions |
| region-count | warning  | <= 20000  | 27839.00 | 10.139.111.103:20160 tikv has too many regions |
| region-count | warning  | <= 20000  | 27818.00 | 10.139.111.102:20161 tikv has too many regions |
| region-count | warning  | <= 20000  | 27655.00 | 10.139.111.102:20162 tikv has too many regions |
| region-count | warning  | <= 20000  | 27514.00 | 10.139.111.67:20162 tikv has too many regions  |
| region-count | warning  | <= 20000  | 27422.00 | 10.139.111.67:20163 tikv has too many regions  |
+--------------+----------+-----------+----------+------------------------------------------------+

You can merge regions or increase the resources allocated to TiKV.

The warning can be ignored, right?

If you have spare resources, scale out; if you don't, adjust the monitoring threshold. Keep in mind, though, that TiDB recommends no more than 20,000 regions per TiKV node, because exceeding this can degrade performance.

Disk usage is less than 30%, do we still need to add resources?

Is there an official metric specification?

Even if you ignore it, you should still understand why it happens.

“If the CPU usage of Raftstore reaches above 85%, it can be considered as busy and has become a bottleneck.”

The document mentions abnormal situations, indicating that if there are too many regions, it may lead to high CPU load.

If no issues are found, I think it can be ignored.

How can I check the proportion of empty regions and small regions? I didn’t see it in the documentation.

Region and RocksDB

Although TiKV splits data into multiple Regions based on ranges, all Region data on the same node is still stored indiscriminately in the same RocksDB instance, while the logs required for Raft protocol replication are stored in another RocksDB instance. The reason for this design is that the performance of random I/O is much lower than that of sequential I/O, so TiKV uses the same RocksDB instance to store this data, allowing writes from different Regions to be merged into a single I/O operation.

Region and Raft Protocol

The replicas of a Region maintain data consistency through the Raft protocol. A write request can only be handled by the Leader, and it must be written to a majority of replicas (the default configuration is 3 replicas, so every write must succeed on at least two of them) before success is returned to the client.
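As a small illustration of the majority rule described above, here is a minimal sketch. The function names are made up for this example and are not part of TiKV.

```python
def majority(replicas: int) -> int:
    """Smallest number of replicas that forms a Raft majority."""
    if replicas < 1:
        raise ValueError("replicas must be positive")
    return replicas // 2 + 1


def write_committed(acks: int, replicas: int = 3) -> bool:
    """True once enough replicas (Leader included) have persisted the write."""
    return acks >= majority(replicas)


for n in (3, 5):
    print(f"{n} replicas -> majority of {majority(n)}")
```

With the default of 3 replicas, a write acknowledged by only the Leader is not yet committed, while a write acknowledged by the Leader and one Follower is.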

TiKV tries to keep the data stored in each Region at an appropriate size, currently 96 MiB by default, which makes PD's scheduling decisions easier. When the size of a Region exceeds a certain limit (144 MiB by default), TiKV splits it into two or more Regions. Similarly, when a Region becomes too small because of a large number of delete requests (below 20 MiB by default), TiKV merges two adjacent small Regions into one.
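The thresholds in the paragraph above can be sketched as a simple classifier. This is only an illustration: the constant names are invented here, the values are the defaults quoted in this thread, and the real merge decision also considers key counts and other conditions.

```python
# Defaults quoted in the thread, in MiB. Names are illustrative, not TiKV/PD identifiers.
TARGET_SIZE_MIB = 96      # size TiKV aims for
SPLIT_LIMIT_MIB = 144     # above this, the Region is split
MERGE_LIMIT_MIB = 20      # below this, the Region is a merge candidate


def classify_region(size_mib: float) -> str:
    """Return what would happen to a Region of the given approximate size."""
    if size_mib > SPLIT_LIMIT_MIB:
        return "split"
    if size_mib < MERGE_LIMIT_MIB:
        return "merge-candidate"
    return "ok"


for size in (5, 96, 200):
    print(size, "MiB ->", classify_region(size))
```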

When PD needs to move a replica of a Region from one TiKV node to another, PD first adds a Learner replica for this Raft Group on the target node (it replicates the Leader's data but is not counted toward the majority for write requests). When the progress of this Learner replica has roughly caught up with the Leader, the Leader promotes it to a Follower and then removes the Follower replica on the source node, which completes one replica move for the Region.

The scheduling principle of the Leader replica is similar, but it requires a Leader Transfer after the Learner replica on the target node becomes a Follower replica, allowing the Follower to initiate an election to become the new Leader. After that, the new Leader is responsible for deleting the old Leader’s replica.
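The two scheduling flows described above can be written down as a toy simulation. This is a sketch of the order of steps only, not how PD or TiKV implement it; the store names and the `move_replica` helper are hypothetical.

```python
from typing import Dict, List


def move_replica(peers: Dict[str, str], source: str, target: str) -> List[str]:
    """Move one replica from source to target.

    peers maps a store name to its role: 'leader', 'follower' or 'learner'.
    The dict is updated in place and the list of performed steps is returned.
    """
    if source not in peers:
        raise ValueError(f"{source} holds no replica of this Region")
    if target in peers:
        raise ValueError(f"{target} already holds a replica of this Region")

    steps = []
    # 1. Add a Learner on the target; it copies data but does not vote.
    peers[target] = "learner"
    steps.append(f"add learner on {target}")
    # 2. Once it has caught up, promote it to a Follower.
    peers[target] = "follower"
    steps.append(f"promote learner on {target} to follower")
    # 3. If the replica being moved is the Leader, transfer leadership first.
    if peers[source] == "leader":
        peers[source] = "follower"
        peers[target] = "leader"
        steps.append(f"transfer leader from {source} to {target}")
    # 4. Remove the old replica from the source store.
    del peers[source]
    steps.append(f"remove replica on {source}")
    return steps


region = {"tikv-1": "leader", "tikv-2": "follower", "tikv-3": "follower"}
for step in move_replica(region, "tikv-1", "tikv-4"):
    print(step)
print(region)
```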

I understand this. How should I handle it when the region count returned by my query exceeds 20,000? Please provide the specific command to execute.

My clusters also have this warning. If it doesn’t affect cluster performance, it can be ignored. If you want to adjust the number of regions in a single TiKV, in version 7.1, the size of the region can be set through coprocessor.region-split-size. The recommended region sizes are 96 MiB, 128 MiB, and 256 MiB. The larger the region-split-size, the more likely performance jitter will occur. It is not recommended to set the region size to more than 1 GiB, and it is strongly advised not to exceed 10 GiB. If you are using TiFlash, the region size cannot exceed 256 MiB. If you are using the Dumpling tool, the region size cannot exceed 1 GiB. After increasing the region size, you need to reduce concurrency when using the Dumpling tool, otherwise, there is a risk of OOM in TiDB.
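To get a feel for how much a larger region size could help, here is a back-of-the-envelope estimate. It assumes regions are already close to the current split size and that data volume stays the same, which may not hold if many regions are small or empty; in that case merging matters more than the split size. The helper name is made up for this example.

```python
import math


def estimated_region_count(current_count: int,
                           current_split_mib: int,
                           new_split_mib: int) -> int:
    """Rough region count after changing the split size, data volume unchanged."""
    if new_split_mib <= 0:
        raise ValueError("new_split_mib must be positive")
    return math.ceil(current_count * current_split_mib / new_split_mib)


# 28774 is the highest per-store count from the inspection result in this thread.
for new_size in (128, 256):
    print(new_size, "MiB ->", estimated_region_count(28774, 96, new_size))
```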

There is no performance impact for now. I suspect there are too many small regions, but is there a metric I can look at? How can I see the proportion of small regions? The monitoring only shows empty regions, and I want to find the small ones.

In pd-ctl, you can directly use the region command to see all region information, but you need jq to format the JSON output for viewing.
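As an alternative to jq, the JSON can be summarized with a short script. This is a sketch under assumptions: it expects the output of the pd-ctl region command saved to a file (for example `regions.json`, a file name chosen here), with a top-level `regions` array whose entries carry an `approximate_size` field in MiB. Check these field names against the output of your own version. The 1 MiB cutoff for "empty" is also an assumption.

```python
import json
from typing import Dict, Iterable, List

EMPTY_MIB = 1    # assumption: regions reported at <= 1 MiB are counted as empty
SMALL_MIB = 20   # merge threshold quoted in this thread


def load_regions(path: str) -> List[dict]:
    """Read a saved pd-ctl region dump and return its list of regions."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh).get("regions", [])


def size_breakdown(regions: Iterable[dict]) -> Dict[str, float]:
    """Count empty and small regions and their share of the total."""
    total = empty = small = 0
    for region in regions:
        total += 1
        size = region.get("approximate_size", 0)
        if size <= EMPTY_MIB:
            empty += 1
        elif size < SMALL_MIB:
            small += 1
    return {
        "total": total,
        "empty": empty,
        "small": small,
        "empty_share": empty / total if total else 0.0,
        "small_share": small / total if total else 0.0,
    }


# Made-up sample data; replace with load_regions("regions.json") on a real dump.
sample = [
    {"id": 1, "approximate_size": 1},
    {"id": 2, "approximate_size": 10},
    {"id": 3, "approximate_size": 96},
    {"id": 4, "approximate_size": 120},
]
print(size_breakdown(sample))
```

A high small or empty share suggests the region count is driven by fragmentation rather than data volume, so merging is worth looking at before adding nodes.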

A region is a logical concept representing a continuous key range, and its size and key count are estimates. region-split-keys and region-split-size are target values for the new regions produced by a split; they aim to keep regions around a certain size but are not hard limits. Check out these two official documents:

https://docs.pingcap.com/zh/tidb/stable/tidb-storage

https://docs.pingcap.com/zh/tidb/stable/tidb-computing

Thank you, I will study it first.