Application environment: Production

Environment & Setup

- Kubernetes version: v1.23.6

- TiDB Operator version: v1.3.9

- TiDB/TiKV/PD version: v6.1.0

Reproduction method:

Problem:

- TiKV Pod (tikv-1) fails to start.

- Logs show:

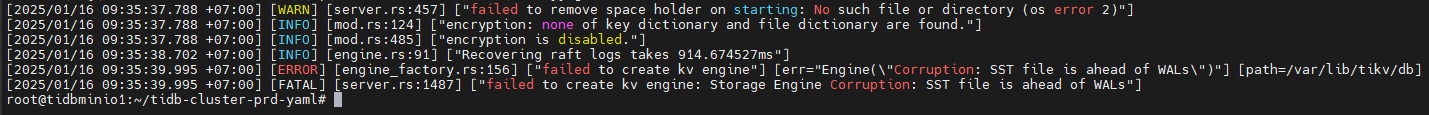

[2025/01/16 09:35:37.788 +07:00] [WARN] [server.rs:457] ["failed to remove space holder on starting: No such file or directory (os error 2)"]

[2025/01/16 09:35:37.788 +07:00] [INFO] [mod.rs:124] ["encryption: none of key dictionary and file dictionary are found."]

[2025/01/16 09:35:37.788 +07:00] [INFO] [mod.rs:485] ["encryption is disabled."]

[2025/01/16 09:35:38.702 +07:00] [INFO] [engine.rs:91] ["Recovering raft logs takes 914.674527ms"]

[2025/01/16 09:35:39.995 +07:00] [ERROR] [engine_factory.rs:156] ["failed to create kv engine"] [err="Engine(\"Corruption: SST file is ahead of WALs\")"] [path=/var/lib/tikv/db]

[2025/01/16 09:35:39.995 +07:00] [FATAL] [server.rs:1487] ["failed to create kv engine: Storage Engine Corruption: SST file is ahead of WALs"]

Resource allocation:

- PD requests: 4 CPU, 8Gi Memory, 50Gi Storage

- TiKV requests: 16 CPU, 48Gi Memory, 4096Gi Storage

- TiDB requests: 8 CPU, 16Gi Memory

- TiFlash requests: 8 CPU, 8–16Gi Memory, 500Gi Storage

Attachment:

The “SST file is ahead of WALs” error in TiKV can be attributed to several potential causes, particularly in version 6.1.0. This issue often indicates a mismatch between the state of SST files and Write Ahead Logs (WALs), which can lead to data corruption. Here are some likely causes and steps to resolve the issue:

Likely Causes:

- Data Corruption: This can occur if there is a failure in synchronizing WALs to disk before SST files are written. This might happen due to improper handling of disk writes or unexpected shutdowns.

- Configuration Errors: Misconfigurations in TiKV settings, such as incorrect WAL synchronization settings, can lead to this issue. For instance, if the sync-log setting is not enabled, WALs might not be flushed to disk in time.

- Known Bugs: There might be specific bugs in TiKV version 6.1.0 that contribute to this problem. These bugs could affect the order of operations between WALs and SST files.

Steps to Resolve:

- Enable WAL Synchronization: Ensure that the sync-log configuration is set to true to guarantee that WALs are flushed to disk before SST files are written. This setting helps maintain data integrity during unexpected shutdowns.

- Check Disk Health: Verify the health and performance of the storage disks. Slow or failing disks can cause delays in writing WALs, leading to this error.

- Upgrade TiKV: If the issue persists, consider upgrading to a newer version of TiKV where this bug might have been addressed. Check the release notes for any bug fixes related to WAL and SST synchronization.

- Review Logs and Metrics: Analyze TiKV logs and metrics to identify any anomalies or patterns that could indicate the root cause of the issue. This can provide insights into whether the problem is due to configuration, hardware, or software bugs.

- Consult Documentation and Support: Refer to the official TiKV documentation for any specific guidance on configuration settings. Additionally, reaching out to TiKV support or community forums can provide further assistance and potential workarounds.

For more detailed information, refer to the TiKV configuration documentation.
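As a rough aid for the "Review Logs and Metrics" step, the sketch below scans TiKV log lines for ERROR/FATAL entries and flags the corruption message. It assumes the bracketed layout seen in the log excerpt in this post; the function names and the regular expression are illustrative and are not part of any TiKV tooling.

```python
import re

# Assumed layout, taken from the log excerpt in this thread:
# [timestamp] [LEVEL] [file:line] ["message"] optional [key=value] fields
LOG_LINE = re.compile(
    r'^\[(?P<ts>[^\]]+)\] \[(?P<level>[A-Z]+)\] \[(?P<src>[^\]]+)\] \[(?P<rest>.*)\]$'
)
MARKER = "SST file is ahead of WALs"


def find_engine_errors(lines):
    """Return (timestamp, level, source, rest) tuples for ERROR/FATAL lines."""
    hits = []
    for line in lines:
        m = LOG_LINE.match(line.strip())
        if m and m.group("level") in ("ERROR", "FATAL"):
            hits.append((m.group("ts"), m.group("level"), m.group("src"), m.group("rest")))
    return hits


def has_wal_corruption(lines):
    """True if any ERROR/FATAL line mentions the SST/WAL corruption message."""
    return any(MARKER in rest for _, _, _, rest in find_engine_errors(lines))


# Two lines copied from the excerpt above, used here as sample input.
SAMPLE = [
    r'[2025/01/16 09:35:38.702 +07:00] [INFO] [engine.rs:91] ["Recovering raft logs takes 914.674527ms"]',
    r'[2025/01/16 09:35:39.995 +07:00] [FATAL] [server.rs:1487] ["failed to create kv engine: Storage Engine Corruption: SST file is ahead of WALs"]',
]

for ts, level, src, rest in find_engine_errors(SAMPLE):
    print(ts, level, src, rest)
```

To run it against a real Pod, the lines could come from a saved copy of the Pod's log output instead of SAMPLE.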

So if you have 3 TiKV hosts and one of them is having issues, the best option is probably to add a new host, make sure everything is OK (all regions have 3 replicas), and then remove the problematic host. Also note that the latest v6.1.x is v6.1.7. It would be good to upgrade to the latest v6.1.x release once everything is healthy. And as the latest LTS release is v8.5.0, you could also consider upgrading to a newer major (LTS) release.
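The "add a host, verify, then remove" order can be checked with a small script before taking the broken store offline. This is only a sketch: it assumes you have saved the JSON printed by pd-ctl's `store` and `region check miss-peer` commands, and that the field names (`stores`, `store.id`, `store.state_name`, `store.labels`, `count`) match what your PD version prints. The function name `safe_to_remove` is hypothetical.

```python
import json


def is_tiflash(store):
    """TiFlash stores carry an engine=tiflash label; they do not hold TiKV replicas."""
    return any(
        label.get("key") == "engine" and label.get("value") == "tiflash"
        for label in store.get("labels", [])
    )


def safe_to_remove(stores_json, miss_peer_json, bad_store_id, min_replicas=3):
    """Return True if enough healthy TiKV stores remain and no region lacks a peer.

    stores_json:    assumed output of `pd-ctl store`
    miss_peer_json: assumed output of `pd-ctl region check miss-peer`
    """
    stores = json.loads(stores_json)["stores"]
    healthy = [
        s["store"]["id"]
        for s in stores
        if s["store"]["id"] != bad_store_id
        and s["store"].get("state_name") == "Up"
        and not is_tiflash(s["store"])
    ]
    missing = json.loads(miss_peer_json).get("count", 0)
    return len(healthy) >= min_replicas and missing == 0
```

With 3 replicas per region, this returns True only once the new host is up (so three healthy TiKV stores remain besides the broken one) and PD reports no regions with missing peers.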

This might be the issue that you’re having: "tikv failed at bootstrap complaining wal corruption" (tikv/tikv issue #16705 on GitHub). The release notes show that this is fixed in 6.5.9, 7.1.5, 7.5.2, and 8.1.0. However, the release notes don’t show any information for v6.1.
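To check whether a given TiKV version should already contain that fix, the version numbers above can be encoded in a small lookup. This is a sketch based only on the releases listed in this post. It assumes that anything from 8.1.0 onward carries the fix, and it returns None for series with no release-note entry (such as v6.1.x) rather than guessing.

```python
# Patch level that first contains the fix, per release series (from the post above).
FIXED_IN = {(6, 5): 9, (7, 1): 5, (7, 5): 2}
# Assumption: every release from this series onward includes the fix.
FIRST_FIXED_SERIES = (8, 1)


def parse_version(version):
    """Parse 'v6.1.0' or '6.1.0' (an optional '-suffix' is ignored) into ints."""
    major, minor, patch = version.lstrip("v").split(".")[:3]
    return int(major), int(minor), int(patch.split("-")[0])


def has_fix(version):
    """True/False if known from the listed releases, None if the series is not listed."""
    major, minor, patch = parse_version(version)
    if (major, minor) >= FIRST_FIXED_SERIES:
        return True
    if (major, minor) in FIXED_IN:
        return patch >= FIXED_IN[(major, minor)]
    return None


for v in ("v6.1.0", "v6.1.7", "v6.5.9", "v7.1.4", "v8.5.0"):
    print(v, has_fix(v))
```

For the cluster in this thread (v6.1.0) and for v6.1.7 the result is None, which matches the point that the release notes say nothing about v6.1.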
