Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: TiSpark cannot connect to the remote service when used locally.

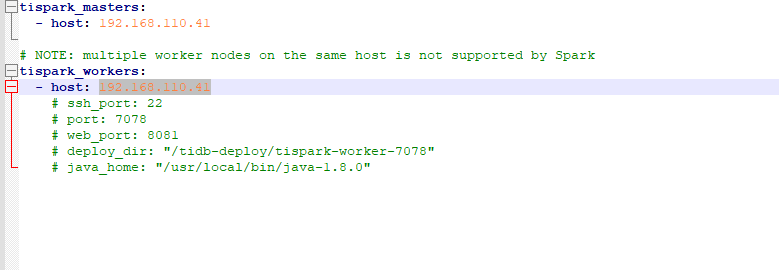

TiSpark SparkSession

When the application is deployed on the server it works, but when it is run locally it cannot connect to the remote service:

Server error:

23/06/25 16:56:06 INFO CoarseGrainedExecutorBackend: Started daemon with process name: 22262@k8s-master

23/06/25 16:56:06 INFO SignalUtils: Registered signal handler for TERM

23/06/25 16:56:06 INFO SignalUtils: Registered signal handler for HUP

23/06/25 16:56:06 INFO SignalUtils: Registered signal handler for INT

23/06/25 16:56:07 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

23/06/25 16:56:07 INFO SecurityManager: Changing view acls to: root

23/06/25 16:56:07 INFO SecurityManager: Changing modify acls to: root

23/06/25 16:56:07 INFO SecurityManager: Changing view acls groups to:

23/06/25 16:56:07 INFO SecurityManager: Changing modify acls groups to:

23/06/25 16:56:07 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

Exception in thread "main" java.lang.reflect.UndeclaredThrowableException

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1713)

at org.apache.spark.deploy.SparkHadoopUtil.runAsSparkUser(SparkHadoopUtil.scala:64)

at org.apache.spark.executor.CoarseGrainedExecutorBackend$.run(CoarseGrainedExecutorBackend.scala:188)

at org.apache.spark.executor.CoarseGrainedExecutorBackend$.main(CoarseGrainedExecutorBackend.scala:281)

at org.apache.spark.executor.CoarseGrainedExecutorBackend.main(CoarseGrainedExecutorBackend.scala)

Caused by: org.apache.spark.SparkException: Exception thrown in awaitResult:

at org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:226)

at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:75)

at org.apache.spark.rpc.RpcEnv.setupEndpointRefByURI(RpcEnv.scala:101)

at org.apache.spark.executor.CoarseGrainedExecutorBackend$$anonfun$run$1.apply$mcV$sp(CoarseGrainedExecutorBackend.scala:201)

at org.apache.spark.deploy.SparkHadoopUtil$$anon$2.run(SparkHadoopUtil.scala:65)

at org.apache.spark.deploy.SparkHadoopUtil$$anon$2.run(SparkHadoopUtil.scala:64)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

… 4 more

Caused by: java.io.IOException: Failed to connect to /192.168.110.156:42474

at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:245)

at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:187)

at org.apache.spark.rpc.netty.NettyRpcEnv.createClient(NettyRpcEnv.scala:198)

at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:194)

at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:190)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

Caused by: io.netty.channel.AbstractChannel$AnnotatedNoRouteToHostException: No route to host: /192.168.110.156:42474

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:716)

at io.netty.channel.socket.nio.NioSocketChannel.doFinishConnect(NioSocketChannel.java:323)

at io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:340)

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:633)

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:580)

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:497)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:459)

at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

… 1 more

Caused by: java.net.NoRouteToHostException: No route to host

… 11 more
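
The innermost cause in the trace above is `No route to host: /192.168.110.156:42474`, raised while the executor was setting up its RPC connection. One way to narrow this down is to test plain TCP reachability of that address from a worker machine. The snippet below is only a minimal sketch: the helper name `can_connect` is made up for illustration, and it assumes that `192.168.110.156:42474` is the address the locally running driver advertised for that particular run (the port normally changes on every run).

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "No route to host", "Connection refused", timeouts and DNS failures.
        return False


# Example, to be run on a worker host while the local application is still running.
# The address below is copied from the stack trace and is only valid for that run:
# print(can_connect("192.168.110.156", 42474))
```

If this returns `False` from the worker while the application is running, the problem is likely at the network level (routing or a firewall between the worker and the local machine) rather than in TiSpark itself.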

Local log (the following lines are printed continuously):

[ INFO ] [2023-06-25 17:32:01] org.apache.spark.storage.BlockManagerMaster [54] - Removal of executor 1495 requested

[ INFO ] [2023-06-25 17:32:01] org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend$DriverEndpoint [54] - Asked to remove non-existent executor 1495

[ INFO ] [2023-06-25 17:32:01] org.apache.spark.storage.BlockManagerMasterEndpoint [54] - Trying to remove executor 1495 from BlockManagerMaster.

[ INFO ] [2023-06-25 17:32:01] org.apache.spark.scheduler.cluster.StandaloneSchedulerBackend [54] - Granted executor ID app-20230625164714-0002/1496 on hostPort 192.168.110.41:7078 with 6 core(s), 1024.0 MB RAM

[ INFO ] [2023-06-25 17:32:01] org.apache.spark.deploy.client.StandaloneAppClient$ClientEndpoint [54] - Executor updated: app-20230625164714-0002/1496 is now RUNNING
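
The local log shows executors being granted and removed over and over, which would be consistent with each executor exiting because it cannot reach the driver. If that is the case here, a common approach is to pin the driver's advertised address and ports so that they can be opened in the firewall. The sketch below only builds the settings; `spark.driver.host`, `spark.driver.port` and `spark.blockManager.port` are standard Spark configuration keys, while the helper names, the host and the two port numbers are placeholders chosen for illustration and are not taken from the original post.

```python
def driver_network_conf(driver_host: str, driver_port: int, block_manager_port: int) -> dict:
    """Build Spark settings that fix the address and ports the driver advertises."""
    return {
        # Address the executors use to call back into the driver;
        # it must be reachable from every worker.
        "spark.driver.host": driver_host,
        # Fixed RPC port instead of a random one, so a firewall rule can allow it.
        "spark.driver.port": str(driver_port),
        # Fixed block manager port, for the same reason.
        "spark.blockManager.port": str(block_manager_port),
    }


def as_submit_args(conf: dict) -> list:
    """Render the settings as `--conf key=value` arguments for spark-submit."""
    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


# Placeholder values: replace with an address and ports that the workers can reach.
conf = driver_network_conf("192.168.110.156", 40000, 40001)
print(" ".join(as_submit_args(conf)))
```

The same key/value pairs can also be passed one by one to `SparkSession.builder.config(key, value)` when the session is created in code.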