Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: Understand TiDB in 15 Minutes (15分中了解TIDB)

Introduction

TiDB is a distributed database that is compatible with the MySQL protocol, so applications can be migrated to it from MySQL with little effort. Its main features are:

- Sharded MySQL clusters can be migrated to TiDB in real time with TiDB's migration tools, without modifying application code.

- TiDB scales out horizontally and elastically: adding nodes increases throughput or storage capacity, so it copes easily with high-concurrency and massive-data workloads.

- TiDB fully supports standard ACID transactions, making it a reliable choice for distributed transactions.

- Its Raft-based majority election protocol provides financial-grade strong data consistency and performs automatic failover without manual intervention.

- Although TiDB is a typical row-oriented OLTP database, it also delivers strong OLAP performance. Combined with TiSpark, it provides a one-stop HTAP solution that serves both OLTP and OLAP from a single copy of stored data, eliminating the traditional, cumbersome ETL process.

- TiDB is designed for the cloud and deeply integrated with Kubernetes. It supports public, private, and hybrid clouds, which makes deployment, configuration, and maintenance simple. It is non-intrusive to the business and can cleanly replace traditional database middleware and sharding solutions, letting developers and operations staff focus on business development instead of database scaling details, which greatly improves productivity.

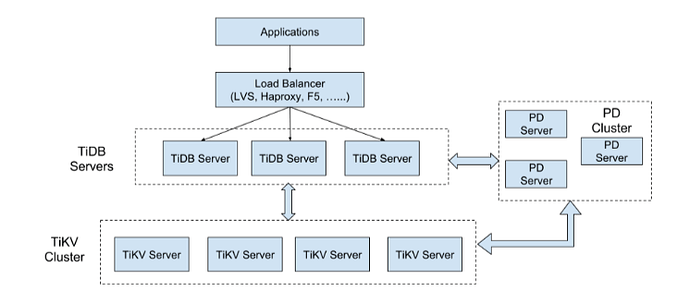

Overall Architecture

Three Components

TiDB Server

TiDB Server is stateless: it stores no data itself and is responsible only for computation. It receives SQL requests, fetches the required data from TiKV, and returns the results. Because it is stateless, it can be scaled out horizontally without limit, and a load balancer (such as LVS, HAProxy, or F5) can expose all instances through a single access address.
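Because TiDB Server holds no data, any instance can accept a new connection, which is what lets a load balancer spread clients across instances. The Python sketch below shows that idea as a simple round-robin picker. The addresses are hypothetical, port 4000 is TiDB's default SQL port, and `RoundRobinBalancer` is an illustrative helper, not a depiction of how LVS, HAProxy, or F5 are actually configured.

```python
from itertools import cycle

# Hypothetical TiDB Server addresses; 4000 is TiDB's default SQL port.
TIDB_ENDPOINTS = ["10.0.1.7:4000", "10.0.1.8:4000", "10.0.1.9:4000"]


class RoundRobinBalancer:
    """Hand out endpoints in rotation, like a round-robin load-balancing policy."""

    def __init__(self, endpoints):
        if not endpoints:
            raise ValueError("need at least one TiDB Server endpoint")
        self._ring = cycle(list(endpoints))

    def next_endpoint(self):
        # Any instance can take a new connection: TiDB Server keeps no data itself.
        return next(self._ring)


balancer = RoundRobinBalancer(TIDB_ENDPOINTS)
picks = [balancer.next_endpoint() for _ in range(4)]
print(picks)  # the 4th pick wraps around to 10.0.1.7:4000
```

Adding a fourth TiDB Server only means adding one more address to the rotation; no data has to move.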

PD Server

Placement Driver (PD) is the management module of the entire cluster. Its main tasks are storing cluster metadata (for example, which TiKV node holds a given key), scheduling and load-balancing the TiKV cluster (for example, migrating data and transferring Raft group leaders), and allocating globally unique, monotonically increasing transaction IDs. PD itself runs as a cluster and should have an odd number of nodes; at least 3 nodes are generally recommended in production.
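PD hands out its transaction IDs from a timestamp oracle (TSO). The single-process sketch below assumes the layout TiDB uses for these timestamps: physical wall-clock milliseconds shifted left by 18 bits, plus an 18-bit logical counter (treat the exact constants as an assumption to verify against the PD documentation). It shows how IDs stay unique and increasing even when many are requested within one millisecond or the clock steps backwards. `TimestampOracle` and `split_ts` are hypothetical names; the real PD also persists its state, survives leader changes, and serves timestamps over the network, none of which is modeled here.

```python
import threading
import time

PHYSICAL_SHIFT_BITS = 18  # assumed layout: low 18 bits hold the logical counter
MAX_LOGICAL = 1 << PHYSICAL_SHIFT_BITS


def _wall_clock_ms():
    return time.time_ns() // 1_000_000


class TimestampOracle:
    """Single-process sketch of a hybrid timestamp allocator in the style of PD's TSO."""

    def __init__(self, clock_ms=_wall_clock_ms):
        self._clock_ms = clock_ms
        self._physical = 0
        self._logical = 0
        self._lock = threading.Lock()

    def next_ts(self):
        with self._lock:
            now = self._clock_ms()
            if now > self._physical:
                # The clock moved forward: new millisecond, counter restarts at 0.
                self._physical, self._logical = now, 0
            else:
                # Same millisecond, or the clock went backwards: bump the counter.
                self._logical += 1
                if self._logical == MAX_LOGICAL:
                    # Counter exhausted: borrow the next millisecond.
                    self._physical += 1
                    self._logical = 0
            return (self._physical << PHYSICAL_SHIFT_BITS) | self._logical


def split_ts(ts):
    """Return (physical_ms, logical) for a composed timestamp."""
    return ts >> PHYSICAL_SHIFT_BITS, ts & (MAX_LOGICAL - 1)


oracle = TimestampOracle(clock_ms=lambda: 1000)  # frozen clock for a deterministic demo
first, second = oracle.next_ts(), oracle.next_ts()
print(split_ts(first), split_ts(second))  # (1000, 0) (1000, 1)
```

Keeping the physical part in the high bits means comparing two IDs as plain integers also orders them by time.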

TiKV Server

TiKV Server is responsible for storing data. Externally, TiKV is a distributed, transactional key-value storage engine. Its basic unit of storage is the Region: each Region stores the data within one key range, from StartKey to EndKey as a left-closed, right-open interval [StartKey, EndKey). Each TiKV node manages many Regions. TiKV replicates data with the Raft protocol to keep it consistent and to survive failures. Replicas are managed per Region: the replicas of one Region, placed on different nodes, form a Raft Group. PD schedules data load balancing across TiKV nodes, also at the Region level.
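Since each Region owns a left-closed, right-open key range and the ranges do not overlap, finding the Region for a key amounts to a binary search over the sorted StartKeys. The sketch below assumes three hypothetical Regions and uses an empty EndKey to mean "end of keyspace"; the `Region` class and `locate` helper are illustrative only, and real TiKV keys use a richer encoding.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    region_id: int
    start_key: bytes  # inclusive
    end_key: bytes    # exclusive; b"" is used here to mean "end of keyspace"


# Hypothetical Regions that cover the whole keyspace without gaps, sorted by start_key.
REGIONS = [
    Region(1, b"", b"g"),
    Region(2, b"g", b"p"),
    Region(3, b"p", b""),
]
START_KEYS = [r.start_key for r in REGIONS]


def locate(key: bytes) -> Region:
    """Return the Region whose [start_key, end_key) range contains key."""
    idx = bisect_right(START_KEYS, key) - 1  # last Region with start_key <= key
    if idx < 0:
        raise LookupError(f"no Region covers {key!r}")
    region = REGIONS[idx]
    if region.end_key and key >= region.end_key:
        raise LookupError(f"no Region covers {key!r}")
    return region


print(locate(b"apple").region_id)  # 1
print(locate(b"g").region_id)      # 2: StartKey is inclusive
print(locate(b"zebra").region_id)  # 3
```

Because the interval is left-closed and right-open, a boundary key such as `b"g"` belongs to exactly one Region, which is what makes splitting and merging Regions unambiguous.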

Core Features

Unlimited Horizontal Scalability

Unlimited horizontal scalability is a major feature of TiDB and covers both computation and storage. TiDB Server processes SQL requests; as the business grows, adding TiDB Server nodes increases overall processing capacity and throughput. TiKV stores the data; as data volume grows, adding TiKV Server nodes expands storage capacity, and PD migrates data between TiKV nodes at the Region level. In the early stages, a minimal deployment is enough (at least 3 TiKV, 3 PD, and 2 TiDB instances are recommended), and TiKV or TiDB instances can be added as demand grows.
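When a new TiKV node joins, PD moves Regions onto it until the load evens out. The greedy loop below is a deliberately simplified stand-in for that process: it balances by Region count only, whereas PD's real schedulers also weigh Region size and other store scores and move replicas through Raft. The `rebalance` helper, store names, and counts are hypothetical.

```python
from collections import Counter


def rebalance(region_counts, max_moves=10_000):
    """Greedy sketch: move one Region at a time from the most-loaded store to the
    least-loaded store until their counts differ by at most one."""
    counts = Counter(region_counts)
    moves = []
    for _ in range(max_moves):
        src = max(counts, key=counts.get)  # store with the most Regions
        dst = min(counts, key=counts.get)  # store with the fewest Regions
        if counts[src] - counts[dst] <= 1:
            break  # balanced as far as whole Regions allow
        counts[src] -= 1
        counts[dst] += 1
        moves.append((src, dst))
    return dict(counts), moves


# Three existing stores with 40 Regions each, plus a newly added empty store.
final, moves = rebalance({"tikv-1": 40, "tikv-2": 40, "tikv-3": 40, "tikv-4": 0})
print(final)       # every store ends with 30 Regions
print(len(moves))  # 30
```

Here all 30 moves land on `tikv-4`, so adding a node immediately takes a share of the existing data off the other three.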

High Availability

High availability is another major feature of TiDB. Each of the three components (TiDB, TiKV, and PD) can tolerate the failure of some of its instances without affecting the availability of the cluster as a whole. For each component, the availability characteristics, the consequences of a single-instance failure, and the recovery method are as follows:

- TiDB: TiDB is stateless; deploy at least two instances and serve clients through a load balancer in front of them. A failed instance affects only the sessions connected to it: from the application's perspective, a single request fails, and reconnecting restores service. To recover, restart the failed instance or deploy a new one.

- PD: PD is a cluster that keeps its data consistent with the Raft protocol. If a failed instance is not the Raft leader, service is unaffected; if it is the leader, a new leader is elected and service recovers automatically. PD cannot serve requests during the election, which takes about 3 seconds. Deploy at least three PD instances. To recover, restart the failed instance or add a new one.

- TiKV: TiKV is a cluster that keeps data consistent with the Raft protocol (the number of replicas is configurable and defaults to three), with load balancing handled by PD. A failed node affects every Region that has a replica on it. Regions whose Leader was on that node stop serving until a new Leader is elected; Regions that had only a Follower there are unaffected. If the node does not recover within a set period (30 minutes by default), PD re-creates its data on other TiKV nodes.
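The failure cases above follow from Raft majority arithmetic: a group of n voting members needs `n // 2 + 1` of them, so it survives `(n - 1) // 2` failures. Two members therefore tolerate no failure, and four tolerate no more than three, which is why odd counts such as three are preferred for both PD instances and TiKV replicas. The sketch below also classifies the Regions affected when one TiKV store fails, using hypothetical Region placements. `MAX_STORE_DOWN_MINUTES` mirrors PD's `max-store-down-time` setting (default 30 minutes); all other names are illustrative.

```python
from dataclasses import dataclass

MAX_STORE_DOWN_MINUTES = 30  # mirrors PD's max-store-down-time, default 30m


def majority(n: int) -> int:
    """Votes a Raft group of n voting members needs to elect a leader or commit."""
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """Members that may fail while a majority can still be reached."""
    return n - majority(n)


@dataclass(frozen=True)
class RegionReplicas:
    region_id: int
    leader_store: int
    follower_stores: tuple


def failure_impact(regions, failed_store: int, down_minutes: int) -> dict:
    """Classify the Regions touched by the failure of one TiKV store."""
    impact = {"leader_lost": [], "follower_lost": [], "re_replicate": []}
    for r in regions:
        if r.leader_store == failed_store:
            # Requests to this Region stall until the survivors elect a new leader.
            impact["leader_lost"].append(r.region_id)
        elif failed_store in r.follower_stores:
            # The leader still reaches a majority, so service continues.
            impact["follower_lost"].append(r.region_id)
        else:
            continue  # no replica on the failed store
        if down_minutes > MAX_STORE_DOWN_MINUTES:
            # Store treated as down: PD rebuilds the missing replica elsewhere.
            impact["re_replicate"].append(r.region_id)
    return impact


print([(n, tolerated_failures(n)) for n in range(1, 6)])
# [(1, 0), (2, 0), (3, 1), (4, 1), (5, 2)]

regions = [
    RegionReplicas(1, leader_store=1, follower_stores=(2, 3)),
    RegionReplicas(2, leader_store=2, follower_stores=(1, 3)),
    RegionReplicas(3, leader_store=2, follower_stores=(3, 4)),
]
print(failure_impact(regions, failed_store=1, down_minutes=5))
print(failure_impact(regions, failed_store=1, down_minutes=45))
```

With three replicas, losing the Leader of Region 1 still leaves two of three members, enough to elect a new Leader; Region 3 has no replica on store 1 and is not listed at all.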

TiDB Technical Insights

- Data Storage: TiDB Technical Insights - On Storage

- Computation (critical for SQL operations): TiDB Technical Insights - On Computation

- Scheduling (TiDB Cluster Management): TiDB Technical Insights - On Scheduling

Installation and Deployment

TiDB installation and deployment can be somewhat complex. Follow the steps carefully. If your company has dedicated operations personnel, they can handle this task. However, most small and medium-sized companies do not have dedicated operations staff, and developers often handle operations tasks. Therefore, it is beneficial for developers to understand the process.

- Deployment Guide: Building a TiDB Cluster from Scratch https://blog.csdn.net/D_Guco/article/details/94746802

Disclaimer

The above is a brief compilation of TiDB information that gives a basic understanding of TiDB. For more detail, refer to the official TiDB documentation; the section on common issues and solutions is especially useful: PingCAP TiDB Official Documentation

Original text: https://blog.csdn.net/D_Guco/ar