Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: Upgrading TiDB clusters from v5.4.0 to v6.5.0: the check passes on the master cluster but fails on the slave cluster with "Regions are not fully healthy: 17061 miss-peer"

[TiDB Usage Environment] Pre-production environment

[TiDB Version] Upgraded from v5.4.0 to v6.5.0

[Reproduction Path] Both the master and slave clusters have now been upgraded to v6.5.0. The check command returns normal results on the master cluster, but on the slave cluster it reports an error: Regions are not fully healthy: 17061 miss-peer

[Encountered Problem: Phenomenon and Impact]

On the master cluster, run the following command:

`# tiup cluster check pre-tidb-cluster-01 --cluster`

Checking region status of the cluster pre-tidb-cluster-01...

All regions are healthy.

On the slave cluster, run the following command:

`# tiup cluster check test-tidb-cluster-01 --cluster`

Checking region status of the cluster test-tidb-cluster-01...

Regions are not fully healthy: 17061 miss-peer

Please fix unhealthy regions before other operations.

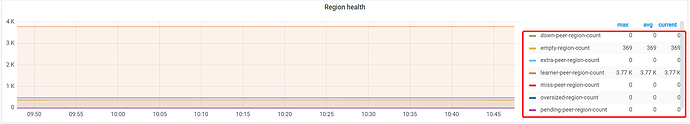

Grafana monitoring of PD Region health in the master cluster:

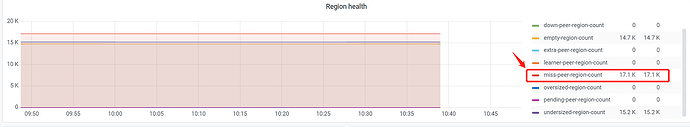

Grafana monitoring of PD Region health in the slave cluster:

[Resource Configuration]

[Attachments: Screenshots/Logs/Monitoring]

How can this problem be solved? Do we need to fix these unhealthy Regions?

Two steps: find the affected Regions, then remove them.

There is one more operational detail I haven’t mentioned:

- The primary cluster was upgraded last Friday.

- The secondary cluster was upgraded this Monday.

- The two upgrades were therefore about 3 days apart.

I don’t know whether this gap is related to the missing Region peers.

Won’t removing those Regions cause data loss?

Here is the manual for finding and removing problematic regions.

What do you mean by master-slave? Did you build a master-slave setup with TiDB?

Solution from @xfworld

Then don’t upgrade for now.

- Check if there are any regions with missing replicas in the existing cluster.

- If these regions with missing replicas are not important, consider manually removing them.

- Once the cluster status is normal, upgrade to 6.5.0.

Operation reference:

https://docs.pingcap.com/zh/tidb/stable/pd-control#region-check-miss-peer–extra-peer–down-peer–pending-peer–offline-peer–empty-region–hist-size–hist-keys

Removal method reference:

https://docs.pingcap.com/zh/tidb/stable/pd-control#恢复数据时寻找相关-region
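The steps above boil down to a gate: upgrade only once the Region check is clean. Below is a minimal shell sketch of that gate, assuming the check output contains one of the two lines quoted in this thread (`All regions are healthy.` or `Regions are not fully healthy: <N> miss-peer`); other tiup versions may word it differently, and the helper names `miss_peer_count` and `regions_ok` are hypothetical.

```shell
#!/bin/sh
# Sketch only: gate an upgrade on the Region health check.
# Assumption: the output of `tiup cluster check <name> --cluster` contains
# either "All regions are healthy." or
# "Regions are not fully healthy: <N> miss-peer", as quoted in this thread.
# miss_peer_count and regions_ok are hypothetical helper names.

# Print <N> from the first "not fully healthy" line on stdin, or 0 if absent.
miss_peer_count() {
  local n
  n=$(sed -n 's/.*Regions are not fully healthy: \([0-9][0-9]*\) miss-peer.*/\1/p' | head -n 1)
  echo "${n:-0}"
}

# Succeed only if the check output on stdin reports that all Regions are healthy.
regions_ok() {
  grep -q 'All regions are healthy'
}
```

After sourcing the functions, a possible usage is `tiup cluster check test-tidb-cluster-01 --cluster 2>&1 | regions_ok && tiup cluster upgrade test-tidb-cluster-01 v6.5.0`; the `2>&1` is there only because it is not certain which stream the message is written to. To list the affected Regions themselves, the first reference above documents `region check miss-peer` in pd-ctl, e.g. `tiup ctl:v6.5.0 pd -u http://<pd-address>:2379 region check miss-peer`.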

A small amount of data may be lost.

I recommend waiting and observing for a while, since a missing peer is normally replenished automatically. If multiple replicas of a Region are lost, however, they have to be handled manually.

PS: Were the missing peers caused by the upgrade, or did they already exist before it?
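The "wait and observe" advice can be tested by sampling the miss-peer count over time: a falling count suggests the peers are being replenished, while a flat one suggests manual handling is needed. The sketch below assumes the same `tiup cluster check` output wording quoted in this thread; the helper names, the six samples, and the 600-second interval are hypothetical, arbitrary choices.

```shell
#!/bin/sh
# Sketch only: decide whether missing peers are being replenished over time.
# Assumption: check output contains "Regions are not fully healthy: <N> miss-peer"
# while peers are missing. Helper names and defaults are hypothetical.

# Print <N> from check output on stdin, or 0 if no such line exists.
miss_peer_count() {
  local n
  n=$(sed -n 's/.*Regions are not fully healthy: \([0-9][0-9]*\) miss-peer.*/\1/p' | head -n 1)
  echo "${n:-0}"
}

# Classify successive counts given as arguments (oldest first):
# "healthy" if the last is 0, "recovering" if the last is below the first,
# otherwise "stalled".
miss_peer_trend() {
  if [ "$#" -lt 2 ]; then
    echo "need at least two samples" >&2
    return 2
  fi
  local first="$1" last
  for last in "$@"; do :; done
  if [ "$last" -eq 0 ]; then
    echo healthy
  elif [ "$last" -lt "$first" ]; then
    echo recovering
  else
    echo stalled
  fi
}

# Print one count per sample; requires tiup and a reachable cluster.
# Defaults (6 samples, 600 s apart) are arbitrary.
watch_miss_peer() {
  local cluster="$1" samples="${2:-6}" interval="${3:-600}" i=0
  while [ "$i" -lt "$samples" ]; do
    tiup cluster check "$cluster" --cluster 2>&1 | miss_peer_count
    i=$((i + 1))
    if [ "$i" -lt "$samples" ]; then
      sleep "$interval"
    fi
  done
}
```

For example, `miss_peer_trend $(watch_miss_peer test-tidb-cluster-01)` prints `stalled` if the count stays at 17061 across all samples. This only distinguishes progress from no progress; it does not show how many replicas each Region has lost.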

Does the slave cluster serve any business traffic? How large is the data volume?

In a master-slave architecture, if the master is fine but the slave has lost data, you can consider rebuilding the slave. This is feasible as long as the slave does not serve any business traffic.

Please share the details of your upgrade process so we can check whether the problem lies there.

I think the key is to find out why the slave cluster lost data and how the master and slave clusters differ.

Yes, dig into it more deeply; you might even find a bug. It’s a good way to improve your skills!

I strongly agree with the expert’s opinion. Keep making progress every day.

If this were going to recover automatically, it would have done so long ago.

Yes. We run two TiDB clusters with master-slave data replication through TiCDC to achieve read-write separation: OLTP workloads run on the primary cluster, while OLAP report queries run on the secondary cluster.

Why didn’t you consider TiFlash for your architecture? Does your company have any special requirements?