Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: Urgent help: after forcibly taking a TiKV node offline, TiDB fails to start and keeps trying to connect to the offline TiKV. Has anyone run into this?

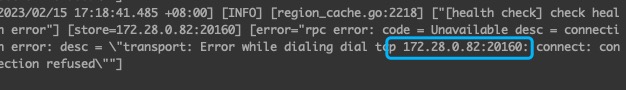

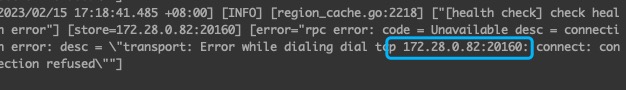

After forcibly taking a node offline, TiDB fails to start, and the logs show that it continuously tries to connect to the offline node. How can this be fixed?

TiDB startup failure log:

What is the current status of the cluster?


It may take a while. The node has probably not finished going offline yet, because the process is asynchronous.

The TiDB node shouldn’t be down. Check the TiDB node logs. The log you posted isn’t a fatal error, right? It shouldn’t cause an exit because of that log.

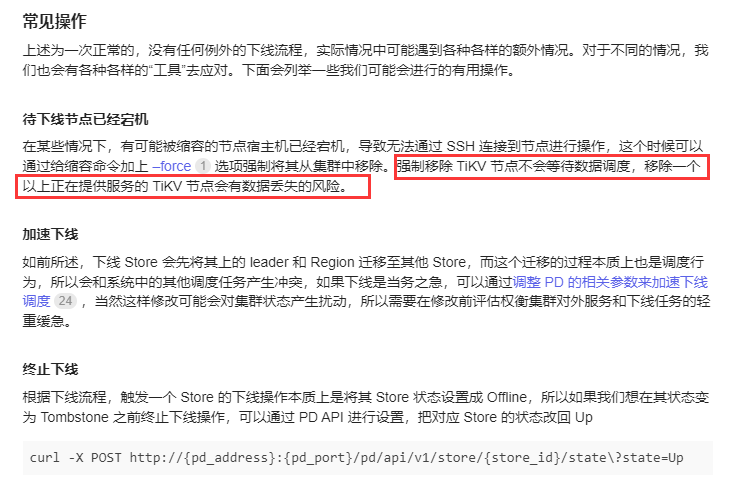

You probably encountered this situation. In your screenshot, one of the TiKV statuses is Pending Offline.

You can refer to this SOP:

I am now trying to scale out the previously decommissioned node, but it prompts me that there is a node information conflict and does not allow the installation.

Where can I delete that part of the metadata in PD?

Is it a production environment? Don’t operate like this. Wait until the cluster is successfully taken offline.

Do you only have 3 TiKV nodes? Then you can’t scale down, right? Won’t it crash? You can’t ensure three replicas!

The TiKV replicas cannot meet the requirement of 3 anymore.
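
The concern in the two replies above can be written as a small check. This is a minimal sketch, assuming the default of 3 replicas per Region and that each replica must be placed on a distinct TiKV store; the function name `can_finish_scale_in` is hypothetical and is not part of any TiDB tool.

```python
def can_finish_scale_in(total_stores, stores_to_remove, max_replicas=3):
    """Return True if the remaining stores can still hold every replica.

    Assumption: each Region needs `max_replicas` copies on distinct stores,
    so the store being removed can only finish going offline if enough
    other stores remain to receive its replicas.
    """
    remaining = total_stores - stores_to_remove
    return remaining >= max_replicas


if __name__ == "__main__":
    # 3 TiKV nodes, removing 1: only 2 stores remain for 3 replicas.
    print(can_finish_scale_in(3, 1))
    # 4 TiKV nodes, removing 1: 3 stores remain, so replicas can be moved.
    print(can_finish_scale_in(4, 1))
```

Under these assumptions, a 3-node cluster cannot complete the removal of one node, which would match a store that stays in Pending Offline.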

If you scale out on the same node after scaling it in, use a different port.

Has the node finished going offline after a whole night?

TiKV's multi-replica majority protocol ensures that forcibly shutting down one replica does not affect service, meaning the cluster can still serve requests normally. This clearly indicates an operational issue, right?
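
The majority argument above comes down to simple arithmetic. The following is a minimal sketch, assuming a majority quorum over the replicas of a single Region; `has_quorum` is a hypothetical helper used only for illustration.

```python
def has_quorum(replicas, failed):
    """Return True if the surviving replicas still form a majority."""
    majority = replicas // 2 + 1
    return replicas - failed >= majority


if __name__ == "__main__":
    # With 3 replicas, a majority is 2, so losing one replica is tolerated.
    print(has_quorum(3, 1))
    # Losing two of three replicas leaves no majority.
    print(has_quorum(3, 2))
```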

TiDB can achieve load balancing and automatic failover. Additionally, it is stateless.

Please send the command you used to force the node offline. I want to try forcing a node offline on my test cluster.

Find the store ID of the offline node, then run pd-ctl store cancel-delete with that store ID.

I have a post you can take a look at. Each TiKV instance corresponds to a store_id, so first find the store ID of the offline node, then use pd-ctl store delete to remove that store_id.
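
Finding the store ID can be done by reading the JSON that pd-ctl's store command prints. The snippet below is a rough sketch, assuming the output has a top-level `stores` list whose entries contain a `store` object with `id`, `address`, and `state_name` fields. The sample data, addresses, and the function name `stores_not_up` are made up for illustration, and the real output contains more fields.

```python
import json

# Hypothetical sample shaped like the assumed pd-ctl store output.
SAMPLE = """
{
  "count": 3,
  "stores": [
    {"store": {"id": 1, "address": "10.0.1.1:20160", "state_name": "Up"}},
    {"store": {"id": 4, "address": "10.0.1.2:20160", "state_name": "Up"}},
    {"store": {"id": 5, "address": "10.0.1.3:20160", "state_name": "Offline"}}
  ]
}
"""


def stores_not_up(pd_store_output):
    """Return (id, address, state_name) for every store whose state is not Up."""
    data = json.loads(pd_store_output)
    result = []
    for entry in data.get("stores", []):
        store = entry["store"]
        if store.get("state_name") != "Up":
            result.append((store["id"], store["address"], store["state_name"]))
    return result


if __name__ == "__main__":
    for store_id, address, state in stores_not_up(SAMPLE):
        print(store_id, address, state)
```

The ID found this way is the value to pass to the pd-ctl store commands mentioned in this thread.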

You can use the relevant pd-ctl commands. If you are sure that you no longer need this TiKV node, use the unsafe command to delete it.

The delete command might report success even though the store has not actually been deleted.