Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

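Original topic: When migrating large volumes of data into a TiDB cluster with tools such as Kettle, including large tables replicated to TiFlash, the services on both nodes of a two-node TiFlash deployment went down.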

[TiDB Usage Environment] Production Environment

[TiDB Version] v6.5.0

[Reproduction Path] Operations performed that led to the issue

[Encountered Issue: Issue Phenomenon and Impact] On a TiDB v6.5.0 cluster, we used tools such as Kettle to migrate a large volume of data into the cluster, including large tables that have TiFlash replicas. During the migration, the TiFlash service went down on both of our two TiFlash nodes.

Which TiFlash configuration parameters can we tune to avoid this problem?

[Resource Configuration]

[Attachments: Screenshots/Logs/Monitoring]

What caused the downtime? Are there any error messages in the logs?

What is your TiFlash configuration?

This is the error log TiFlash produced when it went down:

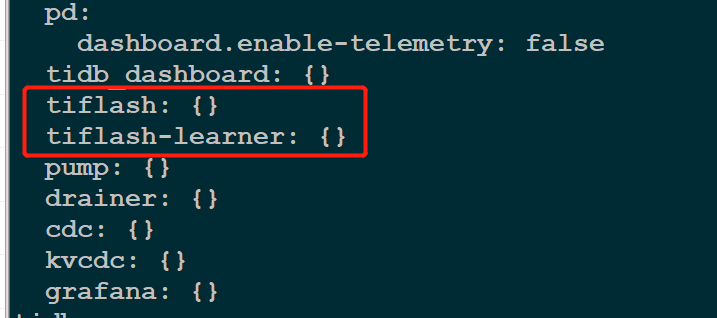

We are using the default configuration for the TiDB cluster.

I was asking about the hardware configuration…

Seriously, you were given a Q&A template, and you didn’t even fill it out completely…

These are just errors; they don't show why the process was killed…

First, check whether TiFlash is co-located with TiKV or other components on the same host. Then check the resource usage on the TiFlash hosts, and see whether the synchronization speed parameters mentioned above have been adjusted; you can try lowering them.
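The co-location check in the first step can be done by hand from the cluster topology. A minimal sketch, assuming you have the component role and host of each instance as a list of pairs (for example transcribed from the Role and Host columns of `tiup cluster display <cluster-name>`); the function name and the sample hosts are hypothetical:

```python
from collections import defaultdict


def colocated_tiflash_hosts(instances):
    """Return {host: [other components]} for hosts where TiFlash shares the machine.

    `instances` is a list of (component, host) pairs. Hosts running only
    TiFlash (even several TiFlash instances) are not reported.
    """
    roles_by_host = defaultdict(set)
    for component, host in instances:
        roles_by_host[host].add(component)
    return {
        host: sorted(roles - {"tiflash"})
        for host, roles in roles_by_host.items()
        if "tiflash" in roles and len(roles) > 1
    }


# Hypothetical topology: one TiFlash node shares a host with a TiKV node.
topology = [
    ("tidb", "10.0.1.1"),
    ("tikv", "10.0.1.2"),
    ("tiflash", "10.0.1.2"),
    ("tiflash", "10.0.1.3"),
]
print(colocated_tiflash_hosts(topology))
```

Any host this reports is one where TiFlash competes with another component for memory, CPU, and disk during a bulk load.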

In TiDB v6.5.0, you can try to keep TiFlash from going down by tuning its configuration. Consider the following parameters:

tikv-client.grpc-connection-count controls the number of connections between TiFlash and TiKV. By default, TiFlash creates 4 connections for each TiKV node. If your TiKV cluster is relatively large, you may need to increase this parameter so that TiFlash can fetch data more quickly. You can add the following configuration to TiFlash’s config.toml file:

```toml
[tikv-client]
grpc-connection-count = 16
```

This will create 16 connections for each TiKV node.
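Under the model described in this reply (a fixed pool of connections per TiKV node), the number of connections one TiFlash instance opens grows linearly with the number of TiKV nodes. A small arithmetic sketch, assuming that model; the function name is hypothetical, and whether this parameter applies to TiFlash in v6.5.0 should be checked against the official configuration docs:

```python
def grpc_connections_per_tiflash(tikv_nodes: int, per_node: int = 4) -> int:
    """Connections one TiFlash instance would hold: one pool per TiKV node."""
    if tikv_nodes < 0 or per_node < 1:
        raise ValueError("tikv_nodes must be >= 0 and per_node >= 1")
    return tikv_nodes * per_node


# Compare the default of 4 per node with the suggested 16 on a 3-node TiKV cluster.
print(grpc_connections_per_tiflash(3))
print(grpc_connections_per_tiflash(3, per_node=16))
```

Raising the value speeds up fetching only if the host has spare capacity; each extra connection also costs some memory and CPU.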

max-server-connections limits the number of connections between TiFlash and clients. By default, the limit is 1024 connections. If you have a large number of clients, you may need to increase this parameter so that TiFlash can handle requests better. You can add the following configuration to TiFlash’s config.toml file:

```toml
[server]
max-server-connections = 4096
```

This raises the limit to 4096 connections.
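A max-connections setting is normally a cap on concurrent connections rather than a number of connections created up front. The generic sketch below (not TiFlash's implementation; the class name is hypothetical) shows that behavior with a bounded semaphore: connections beyond the cap are rejected until one closes.

```python
import threading


class ConnectionLimiter:
    """Admit at most `max_connections` concurrent connections; reject the rest."""

    def __init__(self, max_connections: int):
        self._slots = threading.BoundedSemaphore(max_connections)

    def try_accept(self) -> bool:
        # Non-blocking acquire: returns False once every slot is in use.
        return self._slots.acquire(blocking=False)

    def close(self) -> None:
        # Free one slot; BoundedSemaphore raises ValueError on over-release.
        self._slots.release()


limiter = ConnectionLimiter(max_connections=2)
print([limiter.try_accept() for _ in range(3)])  # [True, True, False]
limiter.close()
print(limiter.try_accept())  # True: a slot was freed
```

A higher cap admits more simultaneous clients, but each admitted connection still consumes resources on the TiFlash host.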

max-background-compactions controls the number of background compaction tasks executed by TiFlash. By default, TiFlash executes 4 compaction tasks simultaneously. If your TiFlash node is relatively powerful, you can increase this parameter so that TiFlash can complete compaction tasks more quickly. You can add the following configuration to TiFlash’s config.toml file:

```toml
[rocksdb]
max-background-compactions = 8
```

This allows up to 8 compaction tasks to run concurrently.
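The effect of a background-task cap can be seen with a plain thread pool: the cap bounds how many tasks run at once, so a larger value drains the queue sooner but puts more simultaneous load on CPU and disk. A generic sketch (dummy tasks, not TiFlash's storage engine; function names are hypothetical):

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def run_background_tasks(num_tasks: int, max_concurrent: int) -> int:
    """Run dummy 'compaction' tasks with at most `max_concurrent` in flight.

    Returns the peak number of tasks observed running at the same time.
    """
    lock = threading.Lock()
    running = 0
    peak = 0

    def fake_compaction(_task_id: int) -> None:
        nonlocal running, peak
        with lock:
            running += 1
            peak = max(peak, running)
        time.sleep(0.01)  # stand-in for the actual merge work
        with lock:
            running -= 1

    with ThreadPoolExecutor(max_workers=max_concurrent) as pool:
        list(pool.map(fake_compaction, range(num_tasks)))
    return peak


print(run_background_tasks(num_tasks=32, max_concurrent=4))  # never exceeds 4
print(run_background_tasks(num_tasks=32, max_concurrent=8))  # never exceeds 8
```

On a host that is already short of resources, raising this kind of limit can increase pressure rather than relieve it.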

Be sure to pay attention to resource allocation issues. If resources are insufficient, it can also cause downtime problems.
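To check memory headroom on a Linux TiFlash host, one option is to read `MemAvailable` and `MemTotal` from `/proc/meminfo`. A minimal sketch, assuming a Linux kernel that reports `MemAvailable`; the 10% warning threshold and the sample values are hypothetical:

```python
import os


def parse_meminfo(text: str) -> dict:
    """Parse /proc/meminfo-style text into {field: value in kB}."""
    info = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        fields = rest.split()
        if fields:
            info[key.strip()] = int(fields[0])
    return info


def available_ratio(info: dict) -> float:
    """Fraction of RAM the kernel estimates is available for new work."""
    return info["MemAvailable"] / info["MemTotal"]


# Sample input used when /proc/meminfo is not present (e.g. not on Linux).
SAMPLE = """MemTotal:       65536000 kB
MemFree:         1024000 kB
MemAvailable:    3276800 kB
"""

if __name__ == "__main__":
    path = "/proc/meminfo"
    if os.path.exists(path):
        with open(path) as f:
            text = f.read()
    else:
        text = SAMPLE
    ratio = available_ratio(parse_meminfo(text))
    print(f"MemAvailable/MemTotal = {ratio:.1%}")
    if ratio < 0.10:  # hypothetical threshold
        print("Warning: little memory headroom on this host")
```

Running it on each TiFlash host during the migration gives a quick view of whether memory pressure is a likely cause of the process being killed.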

There are several configuration parameters in TiFlash that can be adjusted to help prevent service downtime:

- max-merge-region-size: This parameter controls the maximum size of regions that TiFlash can merge during compaction. By default, the maximum size is set to 1 GB. If TiFlash’s compaction tasks are overloaded, you can increase this parameter to allow TiFlash to merge larger regions, reducing the total number of compaction tasks.

- max-background-compactions: This parameter controls the maximum number of concurrent background compaction tasks that TiFlash can run. By default, the maximum number of tasks is set to 4. If TiFlash’s compaction tasks are overloaded, you can increase this parameter to allow TiFlash to run more concurrent tasks.

- max-table-size: This parameter controls the maximum size of a single table that can be loaded into TiFlash. By default, the maximum size is set to 512 GB. If you are loading large tables into TiFlash and encounter service downtime, you can reduce this parameter to limit the size of each table, reducing the impact on TiFlash.

- max-server-memory-usage: This parameter controls the maximum amount of memory that TiFlash can use on each node. By default, the maximum memory usage is set to 80% of the total available memory on the node. If TiFlash is running out of memory and causing service downtime, you can reduce this parameter to limit the amount of memory TiFlash can use, preventing the node from being overwhelmed.
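For a ratio-based memory cap like the one described above, the arithmetic shows how much memory is left for everything else on the host, which matters when TiFlash shares the machine with TiKV. A sketch using integer percentages; the parameter name and its 80% default are as stated in the reply and should be confirmed in the TiFlash v6.5.0 configuration docs; the function names and the 64 GiB host are hypothetical:

```python
GiB = 1024 ** 3


def memory_cap_bytes(total_bytes: int, percent: int = 80) -> int:
    """Bytes a process may use under a percentage-of-total cap."""
    if not 0 < percent <= 100:
        raise ValueError("percent must be in (0, 100]")
    return total_bytes * percent // 100


def headroom_bytes(total_bytes: int, percent: int = 80) -> int:
    """Memory left for the OS and any co-located components."""
    return total_bytes - memory_cap_bytes(total_bytes, percent)


host = 64 * GiB
for pct in (80, 60):
    cap = memory_cap_bytes(host, pct)
    left = headroom_bytes(host, pct)
    print(f"{pct}% cap: TiFlash {cap / GiB:.1f} GiB, others {left / GiB:.1f} GiB")
```

Lowering the percentage trades TiFlash query headroom for protection of the rest of the host.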

It is also important to monitor TiFlash’s performance and adjust these parameters as needed to maintain optimal performance and prevent service downtime. TiDB provides a range of monitoring and diagnostic tools, including TiDB Dashboard and TiUP, which can be used to monitor the health and performance of the TiFlash cluster and make configuration adjustments as needed.
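With a TiUP-managed cluster, a configuration change is typically made with `tiup cluster edit-config` and applied to one component with `tiup cluster reload ... -R tiflash`. A dry-run sketch that builds and prints those commands; the cluster name `my-cluster` is a placeholder, and set `dry_run=False` only when you intend to run them:

```python
import shlex
import subprocess


def tiup_tiflash_config_commands(cluster_name: str) -> list:
    """Commands to edit the cluster config, then reload only TiFlash nodes."""
    return [
        ["tiup", "cluster", "edit-config", cluster_name],
        ["tiup", "cluster", "reload", cluster_name, "-R", "tiflash"],
    ]


def run(commands, dry_run: bool = True) -> None:
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            # edit-config opens an interactive editor; reload restarts TiFlash.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(tiup_tiflash_config_commands("my-cluster"))
```

Reloading with `-R tiflash` limits the restart to TiFlash instances, so TiKV and TiDB keep serving while the new settings take effect.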

I have tried bulk writes with FlinkX ETL, and v6.5 handles them much better.

Tuning the parameters as the previous commenters suggested is a workable approach.
