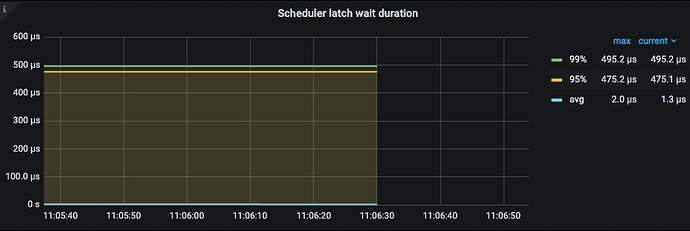

Latches in TiKV are used mainly for concurrency control in the transaction layer. The objects they lock are the keys carried by a transaction request, which prevents multiple concurrent operations on the same key.

For a quick overview of how this latch behaves, read the test cases at the end of this file.

TiKV exposes an ACID transaction model to its clients, so every transaction interface must be atomic. TiKV's transactions are built on top of the underlying raftstore, which currently provides only two atomic operations:

- Snapshot reads

- Batch writes

TiKV has to build atomic transaction interfaces on top of these two primitives, but most transaction interfaces need to:

- Read data and check whether the operation can proceed

- Write the results in a single batch
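A minimal sketch of why this pattern is not atomic by itself, assuming a toy `Store` whose `snapshot_get` and `write_batch` methods (hypothetical names) each stand for one atomic raftstore operation. The function replays by hand one interleaving that nothing forbids: two commands that both want to lock the same key both read, both pass their check, and then both write.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for raftstore: each method call is atomic by itself,
/// but nothing ties two consecutive calls together.
#[derive(Default)]
pub struct Store {
    data: HashMap<String, u64>,
}

impl Store {
    /// One atomic read (stands in for a snapshot read).
    pub fn snapshot_get(&self, key: &str) -> Option<u64> {
        self.data.get(key).copied()
    }

    /// One atomic batch write.
    pub fn write_batch(&mut self, batch: &[(&str, u64)]) {
        for (k, v) in batch {
            self.data.insert(k.to_string(), *v);
        }
    }
}

/// Replays two "lock `key` if it is free" commands from transactions 1 and 2
/// in the order read1, read2, write1, write2, and returns how many of them
/// believe they succeeded.
pub fn interleaved_lock(store: &mut Store, key: &str) -> usize {
    // Read-and-check phase: both commands see the key as free.
    let ok1 = store.snapshot_get(key).is_none();
    let ok2 = store.snapshot_get(key).is_none();
    // Write phase: both write a lock; the second silently overwrites the first.
    if ok1 {
        store.write_batch(&[(key, 1)]);
    }
    if ok2 {
        store.write_batch(&[(key, 2)]);
    }
    [ok1, ok2].iter().filter(|&&ok| ok).count()
}
```

With an empty store, both commands report success and the key ends up locked by transaction 2 only, so transaction 1 holds a lock that no longer exists.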

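Because the read/check step and the batch write are two separate raftstore operations, another request can modify the same keys in between, so the sequence as a whole is not atomic. TiKV closes this gap with in-memory locks called latches: a request latches all of its keys before reading them and releases the latches only after the entire request has been processed.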
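The idea can be sketched as follows, assuming a hypothetical `SimpleLatches` table built on `std::sync::Mutex` and `Condvar` and a toy `Store` standing in for raftstore. This is not TiKV's latch implementation (TiKV queues waiters per key rather than waking every waiter); it only shows that holding a latch across the read and the batch write makes the pair atomic with respect to other requests that latch the same keys.

```rust
use std::collections::{HashMap, HashSet};
use std::sync::{Condvar, Mutex};

/// Hypothetical latch table: the set of currently latched keys, plus a
/// condition variable that waiters sleep on until some keys are released.
#[derive(Default)]
pub struct SimpleLatches {
    held: Mutex<HashSet<String>>,
    released: Condvar,
}

impl SimpleLatches {
    /// Block until none of `keys` is latched, then latch all of them at once.
    /// Taking all keys in one step means a request never holds some keys
    /// while waiting for others.
    pub fn acquire(&self, keys: &[&str]) {
        let mut held = self.held.lock().unwrap();
        while keys.iter().any(|k| held.contains(*k)) {
            held = self.released.wait(held).unwrap();
        }
        for k in keys {
            held.insert(k.to_string());
        }
    }

    /// Release `keys` and wake every waiter so it can re-check its own keys.
    pub fn release(&self, keys: &[&str]) {
        let mut held = self.held.lock().unwrap();
        for k in keys {
            held.remove(*k);
        }
        self.released.notify_all();
    }
}

/// Hypothetical stand-in for raftstore: each method is atomic on its own,
/// but two calls in a row are not.
#[derive(Default)]
pub struct Store {
    data: Mutex<HashMap<String, i64>>,
}

impl Store {
    /// One atomic read (stands in for a snapshot read).
    pub fn read(&self, key: &str) -> Option<i64> {
        self.data.lock().unwrap().get(key).copied()
    }

    /// One atomic batch write.
    pub fn write_batch(&self, batch: Vec<(String, i64)>) {
        let mut data = self.data.lock().unwrap();
        for (k, v) in batch {
            data.insert(k, v);
        }
    }
}

/// Read, compute, then batch-write, with `key` latched for the whole sequence
/// so no other latched request can run between the read and the write.
pub fn increment(latches: &SimpleLatches, store: &Store, key: &str) {
    latches.acquire(&[key]);
    let current = store.read(key).unwrap_or(0);
    store.write_batch(vec![(key.to_string(), current + 1)]);
    latches.release(&[key]);
}
```

If several threads call `increment` on the same key, every update is counted. Removing the `acquire`/`release` calls can make the final value fall short, because two threads may read the same value before either of them writes.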

The following example walks through one interface of the transaction model:

`prewrite(keys)`: the prewrite step of an optimistic transaction. When TiKV receives this request, it checks all of the keys and then locks each of them in storage (a persistent lock, as opposed to an in-memory latch). Once the request succeeds, any other client that tries to lock one of these keys will fail.
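A minimal sketch of the all-or-nothing behavior of prewrite, assuming a hypothetical in-memory `LockTable` that maps each locked key to the id of the transaction holding it. It ignores timestamps and the other checks a real prewrite performs. Note that its check phase and write phase are only atomic here because `&mut self` gives the method exclusive access; in TiKV the two phases are separate raftstore operations, which is exactly the gap latches fill.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for the persistent locks that prewrite writes:
/// key -> id of the transaction holding the lock.
#[derive(Default)]
pub struct LockTable {
    locks: HashMap<String, u64>,
}

impl LockTable {
    /// Simplified prewrite: check every key first, then lock them all.
    /// If any key is locked by another transaction, nothing is written and
    /// the first conflicting key is returned.
    pub fn prewrite(&mut self, keys: &[&str], txn: u64) -> Result<(), String> {
        // Check phase: fail on the first key owned by someone else.
        for key in keys {
            if let Some(&owner) = self.locks.get(*key) {
                if owner != txn {
                    return Err(key.to_string());
                }
            }
        }
        // Write phase: lock every key for `txn`.
        for key in keys {
            self.locks.insert(key.to_string(), txn);
        }
        Ok(())
    }

    /// Whether any transaction currently holds a lock on `key`.
    pub fn is_locked(&self, key: &str) -> bool {
        self.locks.contains_key(key)
    }
}
```

With the requests from the example, transaction 1 locks `k1`, `k2` and `k3`; transaction 2's prewrite of `k3` and `k5` then fails on `k3` and leaves `k5` unlocked.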

Assume two clients send the following requests concurrently:

- client1: `prewrite(k1, k2, k3)`

- client2: `prewrite(k3, k5)`

To keep prewrite atomic, when TiKV receives client1's request it latches k1, k2, and k3 and holds those latches until the whole request has been processed. When client2's request arrives, TiKV tries to latch k3 and k5, finds that k3 is already latched, and registers client2 in the wait queue of k3's latch. client2 is woken up to continue processing only after the latch on k3 is released.
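The queueing behavior above can be sketched as follows. It assumes hypothetical `Latches` and `Lock` types and keeps one FIFO queue of command ids per key in a `HashMap`; the command at the front of a key's queue owns that key's latch. A production implementation may instead hash keys into a fixed number of slots to bound memory. Keys are sorted and acquired in order, so two commands can never each hold a key the other is waiting for. This single-threaded sketch covers only the bookkeeping; actually waking a command and rerunning it is left to the caller.

```rust
use std::collections::{HashMap, VecDeque};

/// Hypothetical per-command latch state: the keys the command needs
/// (sorted and deduplicated) and how many of them it currently owns.
pub struct Lock {
    keys: Vec<String>,
    owned: usize,
}

impl Lock {
    pub fn new(keys: &[&str]) -> Self {
        let mut keys: Vec<String> = keys.iter().map(|k| k.to_string()).collect();
        keys.sort();
        keys.dedup();
        Lock { keys, owned: 0 }
    }

    /// True once every required key is owned.
    pub fn acquired(&self) -> bool {
        self.owned == self.keys.len()
    }
}

/// Each key maps to a FIFO queue of command ids; the front id owns the latch.
#[derive(Default)]
pub struct Latches {
    queues: HashMap<String, VecDeque<u64>>,
}

impl Latches {
    /// Try to take the remaining keys of `lock` for command `who`, in order.
    /// Returns true once all keys are owned. Otherwise `who` stays queued on
    /// the first key it could not get and should call `acquire` again after
    /// being woken up.
    pub fn acquire(&mut self, lock: &mut Lock, who: u64) -> bool {
        while lock.owned < lock.keys.len() {
            let queue = self
                .queues
                .entry(lock.keys[lock.owned].clone())
                .or_default();
            if !queue.contains(&who) {
                queue.push_back(who);
            }
            if queue.front() != Some(&who) {
                return false;
            }
            lock.owned += 1;
        }
        true
    }

    /// Release every key owned by `who` and return the ids of commands that
    /// are now at the front of a queue and should be woken up.
    pub fn release(&mut self, lock: &Lock, who: u64) -> Vec<u64> {
        let mut wakeup = Vec::new();
        for key in &lock.keys[..lock.owned] {
            let queue = self.queues.get_mut(key).expect("an owned key always has a queue");
            let front = queue.pop_front();
            debug_assert_eq!(front, Some(who));
            let next = queue.front().copied();
            match next {
                Some(id) => wakeup.push(id),
                None => {
                    // Nobody is waiting on this key any more.
                    self.queues.remove(key);
                }
            }
        }
        wakeup
    }
}
```

Replaying the scenario with client1 as `who = 1` and client2 as `who = 2`: client1's `acquire` succeeds on k1, k2 and k3; client2's `acquire` queues on k3 and returns `false`; client1's `release` returns `[2]`; client2's retried `acquire` then owns k3 and k5.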