Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.Original topic: DM拉取binlog时候,会自动填充一些内容,导致任务一直hang住

Version: 6.1.1

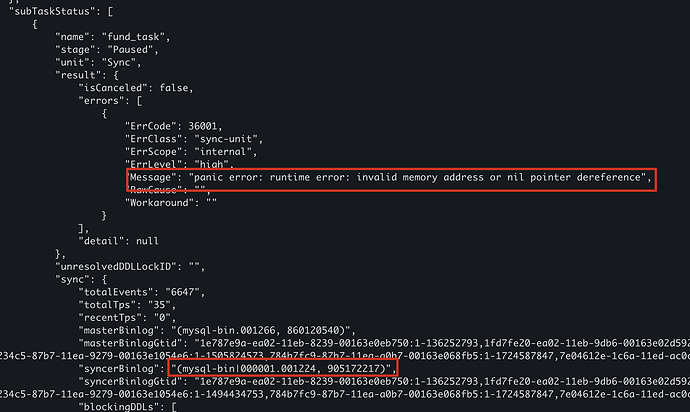

Phenomenon:

As shown in the figure below, the task keeps reporting runtime error: invalid memory address or nil pointer dereference. This error will automatically retry, but it keeps reporting the same issue.

Reason:

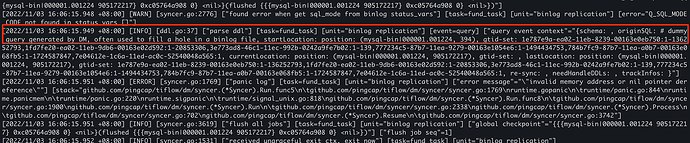

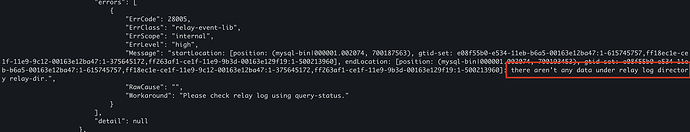

Looking at the dm-worker log file, you can see errors being reported. Although the task has synchronized to "syncerBinlog": "(mysql-bin|000001.001224, 905172217)", an error occurs when parsing the binlog file because DM cannot recognize some content it filled in itself.

[2022/11/03 16:06:15.948 +08:00] [WARN] [syncer.go:2776] ["found error when get sql_mode from binlog status_vars"] [task=fund_task] [unit="binlog replication"] [error="Q_SQL_MODE_CODE not found in status_vars []"]

[2022/11/03 16:06:15.949 +08:00] [INFO] [ddl.go:37] ["parse ddl"] [task=fund_task] [unit="binlog replication"] [event=query] ["query event context"="{schema: , originSQL: # dummy query generated by DM, often used to fill a hole in a binlog file, startLocation: position: (mysql-bin|000001.001224, 394), gtid-set: 1e787e9a-ea02-11eb-8239-00163e0eb750:1-136252793,1fd7fe20-ea02-11eb-9db6-00163e02d592:1-20853306,3e773ad8-46c1-11ec-992b-0242a9fe7b02:1-139,777234c5-87b7-11ea-9279-00163e1054e6:1-1494434753,784b7fc9-87b7-11ea-a0b7-00163e068fb5:1-1724587847,7e04612e-1c6a-11ed-ac0c-52540048a565:1, currentLocation: position: (mysql-bin|000001.001224, 905172217), gtid-set: , lastLocation: position: (mysql-bin|000001.001224, 905172217), gtid-set: 1e787e9a-ea02-11eb-8239-00163e0eb750:1-136252793,1fd7fe20-ea02-11eb-9db6-00163e02d592:1-20853306,3e773ad8-46c1-11ec-992b-0242a9fe7b02:1-139,777234c5-87b7-11ea-9279-00163e1054e6:1-1494434753,784b7fc9-87b7-11ea-a0b7-00163e068fb5:1-1724587847,7e04612e-1c6a-11ed-ac0c-52540048a565:1, re-sync: , needHandleDDLs: , trackInfos: }"]

[2022/11/03 16:06:15.951 +08:00] [ERROR] [syncer.go:1769] ["panic log"] [task=fund_task] [unit="binlog replication"] ["error message"="\"invalid memory address or nil pointer dereference\""] [stack="github.com/pingcap/tiflow/dm/syncer.(*Syncer).Run.func5\n\tgithub.com/pingcap/tiflow/dm/syncer/syncer.go:1769\nruntime.gopanic\n\truntime/panic.go:844\nruntime.panicmem\n\truntime/panic.go:220\nruntime.sigpanic\n\truntime/signal_unix.go:818\ngithub.com/pingcap/tiflow/dm/syncer.(*Syncer).Run.func8\n\tgithub.com/pingcap/tiflow/dm/syncer/syncer.go:1900\ngithub.com/pingcap/tiflow/dm/syncer.(*Syncer).Run\n\tgithub.com/pingcap/tiflow/dm/syncer/syncer.go:2338\ngithub.com/pingcap/tiflow/dm/syncer.(*Syncer).Process\n\tgithub.com/pingcap/tiflow/dm/syncer/syncer.go:702\ngithub.com/pingcap/tiflow/dm/syncer.(*Syncer).Resume\n\tgithub.com/pingcap/tiflow/dm/syncer/syncer.go:3742"]

[2022/11/03 16:06:15.951 +08:00] [INFO] [syncer.go:3619] ["flush all jobs"] [task=fund_task] [unit="binlog replication"] ["global checkpoint"="{{{mysql-bin|000001.001224 905172217} 0xc05764a908 0} <nil>}(flushed {{{mysql-bin|000001.001224 905172217} 0xc05764a908 0} <nil>})"] ["flush job seq"=1]

[2022/11/03 16:06:15.952 +08:00] [INFO] [syncer.go:1531] ["received ungraceful exit ctx, exit now"] [task=fund_task] [unit="binlog replication"]

[2022/11/03 16:06:15.953 +08:00] [INFO] [subtask.go:356] ["unit process returned"] [subtask=fund_task] [unit=Sync] [stage=Paused] [status="{\"totalEvents\":6627,\"totalTps\":1656,\"syncerBinlog\":\"(mysql-bin|000001.001224, 905172217)\",\"syncerBinlogGtid\":\"1e787e9a-ea02-11eb-8239-00163e0eb750:1-136252793,1fd7fe20-ea02-11eb-9db6-00163e02d592:1-20853306,3e773ad8-46c1-11ec-992b-0242a9fe7b02:1-139,777234c5-87b7-11ea-9279-00163e1054e6:1-1494434753,784b7fc9-87b7-11ea-a0b7-00163e068fb5:1-1724587847,7e04612e-1c6a-11ed-ac0c-52540048a565:1\",\"binlogType\":\"local\"}"]

[2022/11/03 16:06:15.953 +08:00] [INFO] [local_reader.go:782] ["binlog reader closing"] [task=fund_task] [unit="binlog replication"] [component="binlog reader"]

[2022/11/03 16:06:15.953 +08:00] [WARN] [local_reader.go:288] ["parse relay finished"] [task=fund_task] [unit="binlog replication"] [component="binlog reader"] [error="parse relay log file mysql-bin.001224 from offset 4 in dir relay_log/dm-172.30.241.143-18262/7e04612e-1c6a-11ed-ac0c-52540048a565.000001: context canceled"]

[2022/11/03 16:06:15.953 +08:00] [INFO] [local_reader.go:788] ["binlog reader closed"] [task=fund_task] [unit="binlog replication"] [component="binlog reader"]

[2022/11/03 16:06:16.031 +08:00] [ERROR] [subtask.go:377] ["unit process error"] [subtask=fund_task] [unit=Sync] ["error information"="ErrCode:36001 ErrClass:\"sync-unit\" ErrScope:\"internal\" ErrLevel:\"high\" Message:\"panic error: runtime error: invalid memory address or nil pointer dereference\" "]

[2022/11/03 16:06:16.031 +08:00] [INFO] [subtask.go:379] [paused] [subtask=fund_task] [unit=Sync]

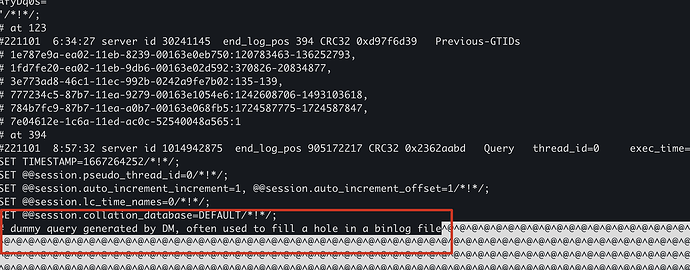

It should be related to the binlog file format. When parsing the binlog file, it is indeed found that DM filled some zeros into the binlog file.