Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: Why doesn't TiKV's compaction order change after disabling dynamic-level-bytes?

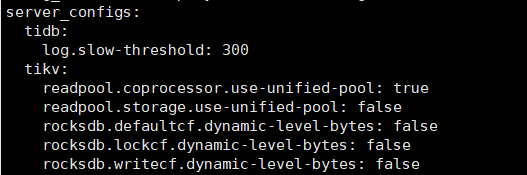

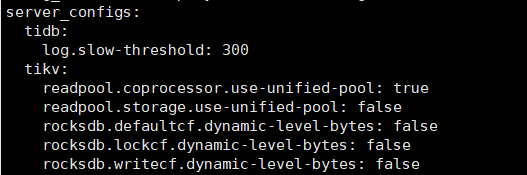

[TiDB Usage Environment] Test

[TiDB Version] TiDB 5.3

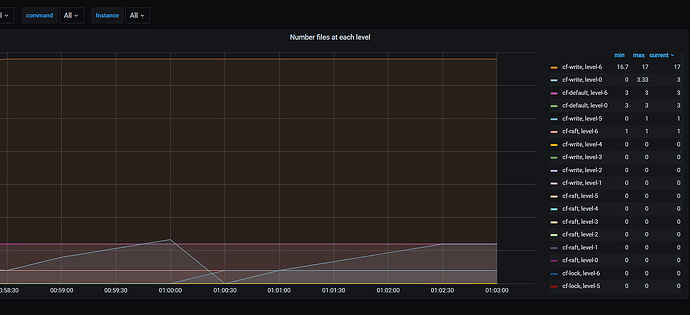

[Problem Encountered] I set `dynamic-level-bytes` to false for all three TiKV column families (CFs) via `tiup cluster edit-config`. When I keep inserting data, L0 compaction still moves data into L6 first, and data only starts going into L5 after the L6 files reach a certain size. Why? I expected compaction to go back to filling L1 through L6 in order. Reference:


Ingesting an SST adds it to the bottommost level (level 6 by default) if it does not overlap with any existing SSTs. TiKV uses SST ingestion to replicate Region peers, so the LSM tree may look like this:

L0 SSTs

L1 SSTs

L2 SSTs

L3~L5 are empty

L6 SSTs (ingested during replication)

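When users delete data contained in the L6 SSTs, the delete markers (tombstones) rarely get compacted all the way down to L6, because each of the levels in between must first exceed its own size target before its data is compacted further down. As a result, the disk space occupied by these SSTs is hard to reclaim.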
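To see why the delete markers get stuck, here is a minimal sketch of the fixed per-level target sizes RocksDB uses when dynamic_level_bytes is off, as in the layout above. The defaults (512 MiB for max-bytes-for-level-base, a multiplier of 10, levels L1~L6) are assumptions modeled on TiKV's defaults for defaultcf/writecf, the function name `static_targets` is hypothetical, and RocksDB's per-level additional multipliers are ignored.

```sh
#!/bin/sh
# Sketch: per-level target sizes (MiB) in RocksDB leveled compaction when
# dynamic_level_bytes is off: L1 = base, L(n) = L(n-1) * multiplier.
# Assumed defaults: base 512 MiB, multiplier 10, bottommost level L6.
# A level is compacted into the next one only when its size exceeds its target;
# the bottommost level's value is printed for completeness only.
static_targets() {
  base=${1:-512}   # max-bytes-for-level-base, in MiB (assumed default)
  mult=${2:-10}    # max-bytes-for-level-multiplier (assumed default)
  last=${3:-6}     # index of the bottommost level
  size=$base
  level=1
  while [ "$level" -le "$last" ]; do
    echo "L$level $size"
    size=$((size * mult))
    level=$((level + 1))
  done
}

static_targets
```

With these numbers, a delete marker that has reached L3 only moves on once L3 holds more than 51,200 MiB (50 GiB), then L4 more than 500 GiB and L5 more than roughly 5,000 GiB, so in a small cluster it may never reach the ingested data in L6.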

When dynamic_level_bytes is on and the DB is empty, RocksDB uses the last level as the base level, which means L0 data is merged directly into the last level until that level exceeds max_bytes_for_level_base. RocksDB then makes the second-to-last level the base level, and so on.
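The rule above can be sketched as a small function that, given the current size of the last level, returns the level L0 is compacted into. This is a simplified model based on the RocksDB wiki's description (the last level's target equals its actual size, each level above gets the target divided by the multiplier, and levels whose target would fall below max_bytes_for_level_base / multiplier stay empty), not RocksDB's actual code, which also considers other non-empty levels. The name `base_level` and the 512 MiB / 10 / L6 defaults are assumptions.

```sh
#!/bin/sh
# Simplified model of dynamic level sizing (dynamic_level_bytes on).
# NOT RocksDB's real implementation; sizes are integer MiB.
# Assumed defaults: base 512 MiB, multiplier 10, last level L6.
base_level() {
  last_size=$1      # current size of the last level, in MiB
  base=${2:-512}    # max_bytes_for_level_base
  mult=${3:-10}     # max_bytes_for_level_multiplier
  last=${4:-6}      # index of the last level
  result=$last      # empty or small DB: L0 goes straight to the last level
  pow=1
  level=$((last - 1))
  while [ "$level" -ge 1 ]; do
    pow=$((pow * mult))
    # target(level) = last_size / mult^(last - level); the level is used only
    # if target >= base / mult, rewritten without division:
    # last_size * mult >= base * mult^(last - level)
    if [ $((last_size * mult)) -ge $((base * pow)) ]; then
      result=$level
      level=$((level - 1))
    else
      break
    fi
  done
  echo "$result"
}

for size in 0 100 512 5120 51200; do
  echo "last level = ${size} MiB -> L0 compacts into L$(base_level "$size")"
done
```

Under this model the base level moves up one level each time the last level grows by another factor of the multiplier (512 MiB, 5,120 MiB, 51,200 MiB, ...), which is the "L6 first, then L5" pattern described in the question.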

In the case above, with dynamic_level_bytes on, the LSM tree looks like this instead:

L0 SSTs

L5 SSTs

L6 SSTs

The delete markers are compacted from L0 into L5 and then from L5 into L6, so the space occupied by the deleted keys is freed.

Changing this value on a DB that is not empty is not recommended. If you really need to change it, first use tikv-ctl to compact the whole DB, for example:

    ./bin/tikv-ctl --host <tikv-ip>:<tikv-port> compact -c write
    ./bin/tikv-ctl --host <tikv-ip>:<tikv-port> compact -c default
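A minimal sketch for running these compactions on several TiKV nodes in one go. The addresses, the `TIKV_CTL` / `TIKV_HOSTS` / `DRY_RUN` variables, and the `compact_all` function are hypothetical; including `-c lock` is an assumption, on the grounds that the setting was changed on all three CFs. By default the script only prints the commands; set `DRY_RUN=0` to run them.

```sh
#!/bin/sh
# Sketch: full manual compaction of each CF on every TiKV node.
# Hypothetical placeholders: replace the addresses with your TiKV stores.
TIKV_CTL="${TIKV_CTL:-./bin/tikv-ctl}"
TIKV_HOSTS="${TIKV_HOSTS:-10.0.1.1:20160 10.0.1.2:20160 10.0.1.3:20160}"

compact_all() {
  for host in $TIKV_HOSTS; do
    # "lock" is included on the assumption that it should be compacted too.
    for cf in default write lock; do
      if [ "${DRY_RUN:-1}" = 1 ]; then
        # Dry run (default): only print the command for review.
        echo "$TIKV_CTL --host $host compact -c $cf"
      else
        # Real run: stop at the first failing compaction.
        "$TIKV_CTL" --host "$host" compact -c "$cf" || return 1
      fi
    done
  done
}

compact_all
```

Printing the commands first makes it easy to check the host list before starting, since a full compaction can take a long time and add noticeable I/O load to each node.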

Hello, according to this description, the issue still seems to be caused by dynamic_level_bytes being enabled. However, I have already set it to false, and data is still stored only in L0, L5, and L6. Is that because I didn't run a full compaction first?

From the description, the best approach seems to be setting it when the cluster is first initialized.

Thank you for your response! I’ll give it a try.

Is this excerpt from the RocksDB documentation?

It's from the description in a TiKV issue.

I also ran a test; you can refer to it.

Thank you! Very detailed.
