Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: In EXPLAIN ANALYZE results, why is the parent operator's time smaller than the child operator's time?

[TiDB Usage Environment] Test

[TiDB Version]

[Reproduction Path] While running EXPLAIN ANALYZE, I noticed that in some plans the execution time of the child operators Selection/IndexRangeScan (cop[tikv]) is longer than the time of their parent operator, IndexReader. Why is this? In most other execution plans, the parent operator's time is greater than or equal to the child operators' time.

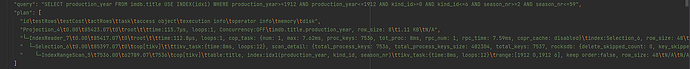

"query": "SELECT production_year FROM imdb.title USE INDEX(idx1) WHERE production_year>=1912 AND production_year<=1912 AND kind_id>=0 AND kind_id<=6 AND season_nr>=2 AND season_nr<=59",

"plan": [

"id\testRows\testCost\tactRows\ttask\taccess object\texecution info\toperator info\tmemory\tdisk",

"Projection_4\t0.00\t85423.07\t0\troot\t\ttime:115.7µs, loops:1, Concurrency:OFF\timdb.title.production_year, row_size: 8\t1.11 KB\tN/A",

"└─IndexReader_7\t0.00\t85417.07\t0\troot\t\ttime:112.8µs, loops:1, cop_task: {num: 1, max: 7.62ms, proc_keys: 7536, tot_proc: 8ms, rpc_num: 1, rpc_time: 7.59ms, copr_cache: disabled}\tindex:Selection_6, row_size: 48\t232 Bytes\tN/A",

"  └─Selection_6\t0.00\t85397.07\t0\tcop[tikv]\t\ttikv_task:{time:8ms, loops:12}, scan_detail: {total_process_keys: 7536, total_process_keys_size: 482304, total_keys: 7537, rocksdb: {delete_skipped_count: 0, key_skipped_count: 7536, block: {cache_hit_count: 12, read_count: 0, read_byte: 0 Bytes}}}\tge(imdb.title.season_nr, 2), le(imdb.title.season_nr, 59), row_size: 48\tN/A\tN/A",

"    └─IndexRangeScan_5\t7536.00\t62789.07\t7536\tcop[tikv]\ttable:title, index:idx1(production_year, kind_id, season_nr)\ttikv_task:{time:8ms, loops:12}\trange:[1912 0,1912 6], keep order:false, row_size: 48\tN/A\tN/A"

]
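To spot this kind of inversion mechanically, here is a minimal Python sketch (not a TiDB tool). It assumes the tab-separated column layout from the plan header above, takes the first `time:` value in each row's execution info as that operator's time, and infers parent/child links from the tree-drawing prefix, assuming TiDB's usual two-space indentation per level. `parse_row` and `find_inversions` are hypothetical helper names. Note that for the `cop[tikv]` rows the value comes from `tikv_task`, which is reported by TiKV, while the root rows report time measured on the TiDB side, so the comparison mixes values collected in different places.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Duration suffixes converted to microseconds. Both the micro sign (U+00B5)
# and Greek mu (U+03BC) are accepted. Assumption: compound durations such as
# "1m2.5s" do not appear; this sketch does not parse them.
UNIT_TO_US = {"ns": 0.001, "\u00b5s": 1.0, "\u03bcs": 1.0, "us": 1.0,
              "ms": 1000.0, "s": 1_000_000.0}

# The leading \b keeps "rpc_time:" from matching, so the first hit in a row is
# the operator's own "time:" (or the "time:" inside "tikv_task:{...}").
TIME_RE = re.compile(r"\btime:\s*([\d.]+)(ns|\u00b5s|\u03bcs|us|ms|s)")

# Rows from the post; the scan_detail payload is abbreviated as "{...}".
PLAN_ROWS = [
    "Projection_4\t0.00\t85423.07\t0\troot\t\ttime:115.7µs, loops:1, Concurrency:OFF\timdb.title.production_year, row_size: 8\t1.11 KB\tN/A",
    "└─IndexReader_7\t0.00\t85417.07\t0\troot\t\ttime:112.8µs, loops:1, cop_task: {num: 1, max: 7.62ms, proc_keys: 7536, tot_proc: 8ms, rpc_num: 1, rpc_time: 7.59ms, copr_cache: disabled}\tindex:Selection_6, row_size: 48\t232 Bytes\tN/A",
    "  └─Selection_6\t0.00\t85397.07\t0\tcop[tikv]\t\ttikv_task:{time:8ms, loops:12}, scan_detail: {...}\tge(imdb.title.season_nr, 2), le(imdb.title.season_nr, 59), row_size: 48\tN/A\tN/A",
    "    └─IndexRangeScan_5\t7536.00\t62789.07\t7536\tcop[tikv]\ttable:title, index:idx1(production_year, kind_id, season_nr)\ttikv_task:{time:8ms, loops:12}\trange:[1912 0,1912 6], keep order:false, row_size: 48\tN/A\tN/A",
]


@dataclass
class Row:
    op_id: str                # operator id with the tree prefix removed
    task: str                 # "root", "cop[tikv]", ...
    depth: int                # length of the tree prefix, used to find the parent
    time_us: Optional[float]  # first "time:" value in microseconds, if any


def parse_row(line: str) -> Row:
    cols = line.split("\t")
    raw_id, task, exec_info = cols[0], cols[4], cols[6]
    op_id = raw_id.lstrip(" │├└─")
    depth = len(raw_id) - len(op_id)
    m = TIME_RE.search(exec_info)
    time_us = float(m.group(1)) * UNIT_TO_US[m.group(2)] if m else None
    return Row(op_id, task, depth, time_us)


def find_inversions(lines: List[str]) -> List[Tuple[str, str]]:
    """Return (child, parent) pairs where the child's time exceeds the parent's."""
    inversions: List[Tuple[str, str]] = []
    stack: List[Row] = []  # ancestors of the current row, innermost last
    for line in lines:
        row = parse_row(line)
        # Pop siblings and their subtrees until the top of the stack is the parent.
        while stack and stack[-1].depth >= row.depth:
            stack.pop()
        parent = stack[-1] if stack else None
        if (parent is not None and parent.time_us is not None
                and row.time_us is not None and row.time_us > parent.time_us):
            inversions.append((row.op_id, parent.op_id))
        stack.append(row)
    return inversions


if __name__ == "__main__":
    for child, parent in find_inversions(PLAN_ROWS):
        print(f"{child} reports more time than its parent {parent}")
```

For this plan the sketch flags only Selection_6 (8ms) against IndexReader_7 (112.8µs); IndexRangeScan_5 and Selection_6 both report 8ms, so that pair is not flagged.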

[Encountered Problem: Problem Phenomenon and Impact]

[Resource Configuration]

[Attachment: Screenshot/Log/Monitoring]