Note: This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: Why does stress testing show that TiKV v7.5.1 write performance is lower than that of TiKV v4.0.2?

[TiDB Usage Environment] Test

[TiDB Version] v7.5.1 and v4.0.2

Hardware Configuration

3 TiKV nodes

Model: Tencent Cloud IT5.8XLARGE128

CPU: 32-core Intel(R) Xeon(R) Platinum 8255C CPU @ 2.50GHz

Memory: 64G

Size of each KV data: 1KB

Cluster Configuration

TiKV v7.5.1

    # TiKV v7.5.1
    global:
      user: "tidb"
      ssh_port: 32200
      deploy_dir: "/data2/tidb-deploy"
      data_dir: "/data2/tidb-data"
      listen_host: 0.0.0.0
      arch: "amd64"
    monitored:
      node_exporter_port: 9100
      blackbox_exporter_port: 9115
    server_configs:
      tidb:
        store: tikv
        log.slow-threshold: 300
        binlog.enable: false
        binlog.ignore-error: false
        log.slow-query-file: "/data2/logs/tidb-slow.log"
        performance.stats-lease: "60s"
      tikv:
        log.file.max-days: 1
        server.labels: { zone: "test", host: "10.5.4.2" }
        server.grpc-concurrency: 8
        server.grpc-raft-conn-num: 20
        storage.scheduler-worker-pool-size: 12
        storage.block-cache.capacity: "15GB"
        raftstore.sync-log: true
        raftstore.split-region-check-tick-interval: "30s"
        raftstore.raft-election-timeout-ticks: 20
        raftstore.raft-store-max-leader-lease: "19s"
        raftstore.raft-max-inflight-msgs: 512
        raftstore.apply-pool-size: 4
        raftstore.store-pool-size: 6
        raftstore.raftdb-path: "/data3/tidb-data/tikv/db"
        raftstore.wal-dir: "/data3/tidb-data/tikv/wal"
        rocksdb.max-background-jobs: 12
        rocksdb.compaction-readahead-size: "4MB"
        rocksdb.defaultcf.block-size: "4KB"
        rocksdb.defaultcf.target-file-size-base: "32MB"
        rocksdb.writecf.target-file-size-base: "32MB"
        rocksdb.lockcf.target-file-size-base: "32MB"
        raftdb.compaction-readahead-size: "4MB"
        raftdb.defaultcf.block-size: "64KB"
        raftdb.defaultcf.target-file-size-base: "32MB"
        readpool.storage.use-unified-pool: false
        readpool.coprocessor.use-unified-pool: true
        readpool.storage.high-concurrency: 16
        readpool.storage.normal-concurrency: 16
        readpool.storage.low-concurrency: 16
      pd:
        log.max-days: 1
        schedule.max-merge-region-size: 20
        schedule.max-merge-region-keys: 200000
        schedule.split-merge-interval: "1h"
        schedule.max-snapshot-count: 3
        schedule.max-pending-peer-count: 16
        schedule.max-store-down-time: "30m"
        schedule.leader-schedule-limit: 4
        schedule.region-schedule-limit: 2048
        schedule.replica-schedule-limit: 64
        schedule.merge-schedule-limit: 8
        schedule.hot-region-schedule-limit: 4
        replication.location-labels: ["zone", "host"]
    pd_servers:
      - host: 10.5.4.2
        name: "pd-1"
        client_port: 2479
        peer_port: 2480
        deploy_dir: "/data2/tidb-deploy/pd-2479"
        data_dir: "/data2/tidb-data/pd-2479"
        log_dir: "/data2/tidb-deploy/pd-2479/log"
      - host: 10.5.4.4
        name: "pd-2"
        client_port: 2479
        peer_port: 2480
        deploy_dir: "/data2/tidb-deploy/pd-2479"
        data_dir: "/data2/tidb-data/pd-2479"
        log_dir: "/data2/tidb-deploy/pd-2479/log"
      - host: 10.5.4.5
        name: "pd-3"
        client_port: 2479
        peer_port: 2480
        deploy_dir: "/data2/tidb-deploy/pd-2479"
        data_dir: "/data2/tidb-data/pd-2479"
        log_dir: "/data2/tidb-deploy/pd-2479/log"
    tikv_servers:
      - host: 10.5.4.4
        port: 20160
        status_port: 20180
        deploy_dir: "/data2/tidb-deploy/tikv-20160"
        data_dir: "/data2/tidb-data/tikv-20160"
        log_dir: "/data2/tidb-deploy/tikv-20160/log"
      - host: 10.5.4.5
        port: 20161
        status_port: 20181
        deploy_dir: "/data2/tidb-deploy/tikv-20161"
        data_dir: "/data2/tidb-data/tikv-20161"
        log_dir: "/data2/tidb-deploy/tikv-20161/log"
      - host: 10.5.4.2
        port: 20161
        status_port: 20183
        deploy_dir: "/data2/tidb-deploy/tikv-20161"
        data_dir: "/data2/tidb-data/tikv-20161"
        log_dir: "/data2/tidb-deploy/tikv-20161/log"
    tidb_dashboard_servers:
      - host: 10.5.4.2
        port: 8080
        deploy_dir: "/data2/tidb-deploy/tidb-dashboard-8080"
        data_dir: "/data2/tidb-data/tidb-dashboard-8080"
        log_dir: "/data2/tidb-deploy/tidb-dashboard-8080/log"
        numa_node: "0"
    monitoring_servers:
      - host: 10.5.4.2
        deploy_dir: "/data2/tidb-deploy/prometheus-8249"
        data_dir: "/data2/tidb-data/prometheus-8249"
        log_dir: "/data2/tidb-deploy/prometheus-8249/log"
    grafana_servers:
      - host: 10.5.4.5
        port: 8089

TiKV v4.0.2

    # TiKV v4.0.2
    global:
      user: "tidb"
      ssh_port: 32200
      deploy_dir: "/data2/tidb-deploy"
      data_dir: "/data2/tidb-data"
      #listen_host: 0.0.0.0
      arch: "amd64"
    monitored:
      node_exporter_port: 9100
      blackbox_exporter_port: 9115
    server_configs:
      tidb:
        store: tikv
        log.slow-threshold: 300
        binlog.enable: false
        binlog.ignore-error: false
        log.slow-query-file: "/data2/logs/tidb-slow.log"
        performance.stats-lease: "60s"
      tikv:
        log-level: "info"
        log-file: "/data2/tidb-deploy/tikv-20161/log/tikv.log"
        log-rotation-timespan: "24h"
        server.labels: { zone: "test", host: "10.5.4.2" }
        server.grpc-concurrency: 8
        server.grpc-raft-conn-num: 20
        storage.scheduler-worker-pool-size: 12
        storage.block-cache.capacity: "15GB"
        raftstore.sync-log: true
        raftstore.split-region-check-tick-interval: "30s"
        raftstore.raft-max-inflight-msgs: 512
        raftstore.apply-pool-size: 4
        raftstore.store-pool-size: 6
        raftstore.raftdb-path: "/data3/tidb-data/tikv/db"
        raftstore.wal-dir: "/data3/tidb-data/tikv/wal"
        rocksdb.max-background-jobs: 12
        rocksdb.compaction-readahead-size: "4MB"
        rocksdb.defaultcf.block-size: "4KB"
        rocksdb.defaultcf.target-file-size-base: "32MB"
        rocksdb.writecf.target-file-size-base: "32MB"
        rocksdb.lockcf.target-file-size-base: "32MB"
        raftdb.compaction-readahead-size: "4MB"
        raftdb.defaultcf.block-size: "64KB"
        raftdb.defaultcf.target-file-size-base: "32MB"
        readpool.storage.use-unified-pool: false
        readpool.coprocessor.use-unified-pool: true
        readpool.storage.high-concurrency: 16
        readpool.storage.normal-concurrency: 16
        readpool.storage.low-concurrency: 16
      pd:
        log.max-days: 1
        schedule.max-merge-region-size: 20
        schedule.max-merge-region-keys: 200000
        schedule.split-merge-interval: "1h"
        schedule.max-snapshot-count: 3
        schedule.max-pending-peer-count: 16
        schedule.max-store-down-time: "30m"
        schedule.leader-schedule-limit: 4
        schedule.region-schedule-limit: 2048
        schedule.replica-schedule-limit: 64
        schedule.merge-schedule-limit: 8
        schedule.hot-region-schedule-limit: 4
        replication.location-labels: ["zone", "host"]
    pd_servers:
      - host: 10.5.4.2
        name: "pd-1"
        client_port: 2479
        peer_port: 2480
        deploy_dir: "/data2/tidb-deploy/pd-2479"
        data_dir: "/data2/tidb-data/pd-2479"
        log_dir: "/data2/tidb-deploy/pd-2479/log"
      - host: 10.5.4.4
        name: "pd-2"
        client_port: 2479
        peer_port: 2480
        deploy_dir: "/data2/tidb-deploy/pd-2479"
        data_dir: "/data2/tidb-data/pd-2479"
        log_dir: "/data2/tidb-deploy/pd-2479/log"
      - host: 10.5.4.5
        name: "pd-3"
        client_port: 2479
        peer_port: 2480
        deploy_dir: "/data2/tidb-deploy/pd-2479"
        data_dir: "/data2/tidb-data/pd-2479"
        log_dir: "/data2/tidb-deploy/pd-2479/log"
    tikv_servers:
      - host: 10.5.4.4
        port: 20160
        status_port: 20180
        deploy_dir: "/data2/tidb-deploy/tikv-20160"
        data_dir: "/data2/tidb-data/tikv-20160"
        log_dir: "/data2/tidb-deploy/tikv-20160/log"
      - host: 10.5.4.5
        port: 20161
        status_port: 20181
        deploy_dir: "/data2/tidb-deploy/tikv-20161"
        data_dir: "/data2/tidb-data/tikv-20161"
        log_dir: "/data2/tidb-deploy/tikv-20161/log"
      - host: 10.5.4.2
        port: 20161
        status_port: 20183
        deploy_dir: "/data2/tidb-deploy/tikv-20161"
        data_dir: "/data2/tidb-data/tikv-20161"
        log_dir: "/data2/tidb-deploy/tikv-20161/log"
    monitoring_servers:
      - host: 10.5.4.2
        deploy_dir: "/data2/tidb-deploy/prometheus-8249"
        data_dir: "/data2/tidb-data/prometheus-8249"
        log_dir: "/data2/tidb-deploy/prometheus-8249/log"
    grafana_servers:
      - host: 10.5.4.5
        port: 8089

Test Plan

The test follows the official TiKV benchmark guide: TiKV | Benchmark Instructions

The testing tool is go-ycsb (GitHub - pingcap/go-ycsb: A Go port of Yahoo! Cloud Serving Benchmark (YCSB)). About 500 million KV pairs (recordcount=524288000) are imported first. The test script is as follows:

    #!/bin/bash

    echo "Benchmarking TiDB"

    # Import test data
    go-ycsb load tikv -P ../workloads/workloada -p tikv.pd="10.5.4.2:2479,10.5.4.4:2479,10.5.4.5:2479" -p tikv.type="raw" -p recordcount=524288000 -p operationcount=30000000 -p threadcount=128 > load_workloada.log 2>&1
    echo "load workloada done"

    sleep 2m

    # Random write
    go-ycsb run tikv -P ../workloads/workload_random_write -p tikv.pd="10.5.4.2:2479,10.5.4.4:2479,10.5.4.5:2479" -p tikv.type="raw" -p recordcount=524288000 -p operationcount=30000000 -p threadcount=128 > workload_random_write.log 2>&1
    echo "run workload_random_write done"
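
For context on how much data the load phase above writes, here is a back-of-the-envelope estimate in Python. It is only a sketch under stated assumptions: 1 KiB per value with key overhead ignored, no compression, PD's default of 3 replicas (the topology does not override it), and "15GB" read as 15 GiB. None of these figures are measured.

```python
# Rough dataset size for the load phase (estimate, not a measurement).
RECORD_COUNT = 524_288_000   # recordcount passed to go-ycsb
VALUE_BYTES = 1024           # "Size of each KV data: 1KB", key overhead ignored
REPLICAS = 3                 # assumption: default replica count
TIKV_NODES = 3               # from the hardware section
BLOCK_CACHE_GIB = 15         # storage.block-cache.capacity, read as GiB (assumption)

GIB = 1024 ** 3

logical_gib = RECORD_COUNT * VALUE_BYTES / GIB
per_node_gib = logical_gib * REPLICAS / TIKV_NODES
cache_fraction = BLOCK_CACHE_GIB / per_node_gib

print(f"logical data: {logical_gib:.0f} GiB")        # logical data: 500 GiB
print(f"per TiKV node: {per_node_gib:.0f} GiB")      # per TiKV node: 500 GiB
print(f"block cache covers about {cache_fraction:.1%} of each node's data")  # 3.0%
```

With 3 replicas on 3 nodes, every node ends up holding a full copy of the data before compression, so the block cache can only cover a small fraction of it under these assumptions.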

The configuration for workload_random_write is as follows:

    # workload_random_write
    recordcount=1000
    operationcount=1000
    workload=core
    readallfields=true
    readproportion=0
    updateproportion=1
    scanproportion=0
    insertproportion=0
    requestdistribution=uniform
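
Note that the workload file says recordcount=1000 and operationcount=1000, while the script passes much larger values with -p. As far as I understand go-ycsb, -p values given on the command line take precedence over the -P file. The Python sketch below only models that merge to show the settings I expect to be in effect; `parse_properties` is a hypothetical helper written for this post, not go-ycsb code.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props

WORKLOAD_FILE = """
# workload_random_write
recordcount=1000
operationcount=1000
workload=core
readallfields=true
readproportion=0
updateproportion=1
scanproportion=0
insertproportion=0
requestdistribution=uniform
"""

# The -p flags from the run command in the test script (tikv.pd omitted).
CLI_OVERRIDES = {
    "tikv.type": "raw",
    "recordcount": "524288000",
    "operationcount": "30000000",
    "threadcount": "128",
}

# Assumed precedence: file values first, command-line values override them.
effective = {**parse_properties(WORKLOAD_FILE), **CLI_OVERRIDES}
for key in ("recordcount", "operationcount", "threadcount", "updateproportion"):
    print(f"{key}={effective[key]}")
```

If that precedence holds, the run phase issues 30 million uniformly distributed updates over the full 524288000-key range, not over 1000 keys.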

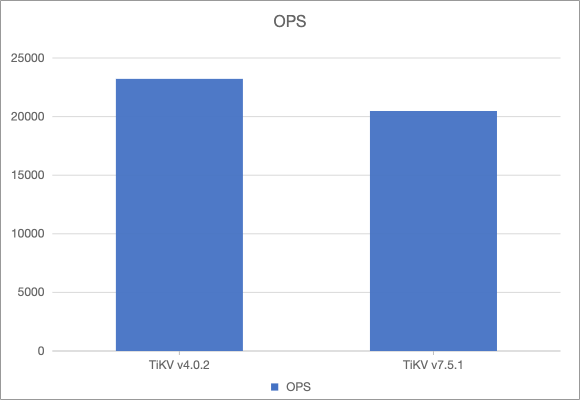

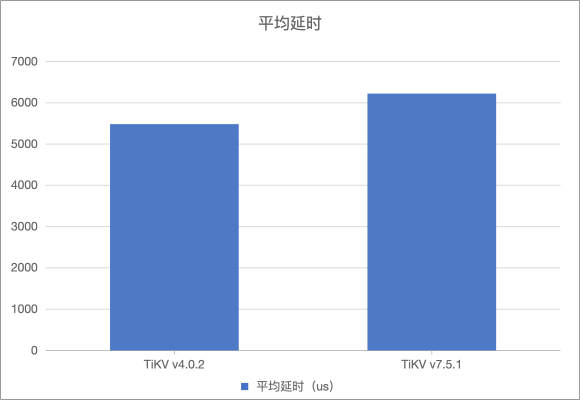

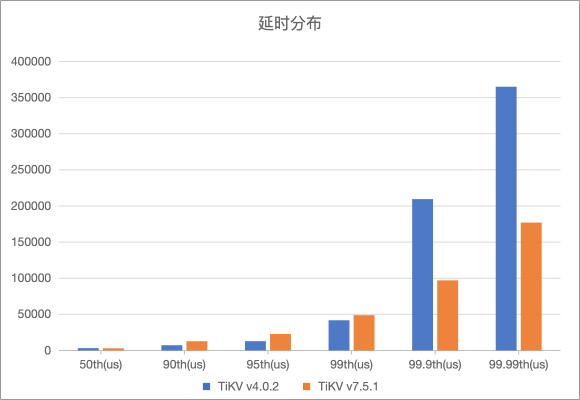

Test Results

TiKV v4.0.2:

TiKV v7.5.1:

Comparison of results:

Judging from the latency distribution, the latency of v7.5.1 is only about half that of v4.0.2, yet its OPS is lower. I tried increasing the capacity of the load-testing client, but it made no significant difference.
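
As a rough sanity check on that observation: as far as I understand, go-ycsb threads behave like closed-loop clients (each thread sends its next request only after the previous one returns), so by Little's law OPS should be roughly threadcount divided by mean latency. The Python sketch below uses made-up latencies, not the measured values; it only illustrates that at a fixed 128 threads, halving latency would be expected to raise OPS, not lower it.

```python
def closed_loop_ops(threads, mean_latency_ms):
    """Upper bound on throughput for closed-loop clients (Little's law):
    each thread completes at most 1000 / mean_latency_ms requests per second."""
    return threads * 1000.0 / mean_latency_ms

THREADS = 128  # threadcount from the test script

# Hypothetical latencies for illustration only, NOT the measured results.
for label, latency_ms in (("higher latency", 4.0), ("half the latency", 2.0)):
    ops = closed_loop_ops(THREADS, latency_ms)
    print(f"{label}: {latency_ms} ms -> at most {ops:.0f} ops/s")
```

If the measured numbers do not fit this relation, the latency and OPS figures may come from different layers (for example TiKV-side duration versus client end-to-end time), or the client threads may not be kept busy. That is a guess on my part, not a conclusion.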

Is it a configuration issue?

The attachment contains a detailed test report. Can someone help take a look?

Tikv v7.5.1 Performance Test Report.docx (16.5 MB)