Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: Why is TiDB writing so slowly? I suspected a disk problem, but switching to an SSD gave the same result. Could someone please take a look?

[TiDB Usage Environment] Testing

[TiDB Version]

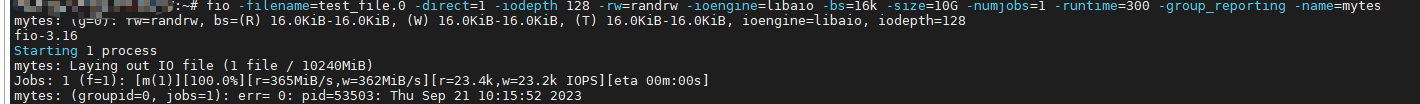

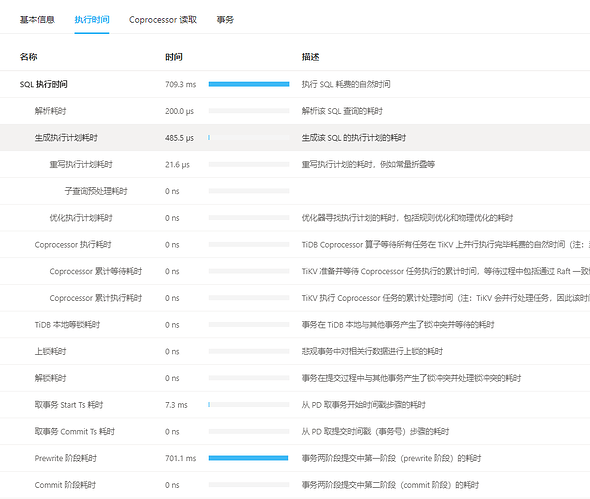

[Reproduction Path] Change the data inserted by sysbench from 119 bytes to 50 KB per row; oltp_insert then reaches only about 300 TPS. A test tool written in Go gives the same result.
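For readers who want to reproduce the measurement without sysbench, here is a minimal sketch of a serial insert-timing loop. It assumes "50k" means 50 KB per row. `measure_tps` and `insert_row` are hypothetical names: `insert_row` stands in for whatever function actually executes the INSERT against TiDB, and a list append is used here only so the sketch runs without a database. It is single-threaded, so it will not match a multi-threaded sysbench run.

```python
import time


def measure_tps(insert_row, payload, count):
    """Call insert_row(payload) `count` times and return rows per second.

    insert_row is a hypothetical callable; in a real test it would run
    an INSERT statement through a database driver.
    """
    start = time.perf_counter()
    for _ in range(count):
        insert_row(payload)
    elapsed = time.perf_counter() - start
    return count / elapsed


# 50 KB payload, matching the row size described in the reproduction path.
payload = "x" * (50 * 1024)

# Stand-in for the database: collect rows in memory.
rows = []
tps = measure_tps(rows.append, payload, 1000)
print(f"inserted {len(rows)} rows of {len(payload)} bytes at {tps:.0f} rows/s")
```

Swapping `rows.append` for a real insert function would give a rough serial TPS figure to compare against the sysbench result.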

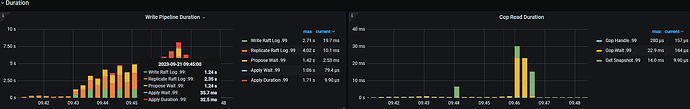

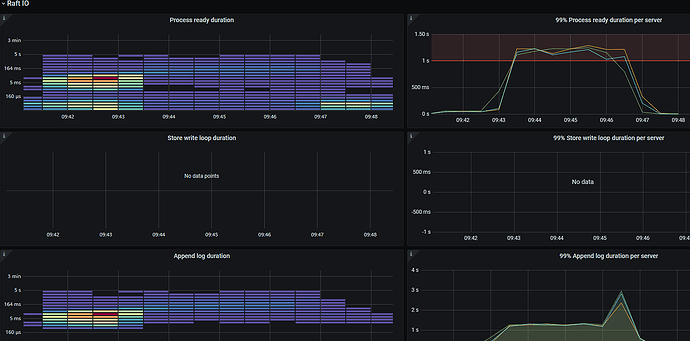

[Encountered Problem: Problem Phenomenon and Impact] Insert performance is very poor. The monitoring shows that prewrite is very high, and the results are the same on both mechanical and solid-state disks. Please see the monitoring screenshots for details. Could you please help take a look?

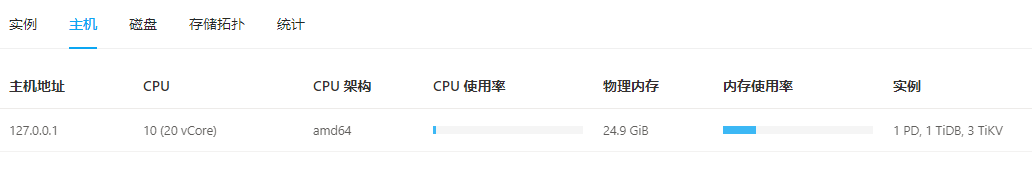

[Resource Configuration]

[Attachments: Screenshots/Logs/Monitoring]

Check the dashboard to see if there are any write hotspots.

Is this the monitoring after replacing the SSD? Please post the output of `tiup cluster display`, the TiKV-Details > Thread CPU panels captured during the SSD test, and the cluster configuration.

Are all the PD and 3 TiKV instances running on a single disk?

Well, it’s like this: first, I think the TPS shouldn’t be this low; second, even after switching to SSD, there’s still no change, so I feel like there’s an issue somewhere, but I haven’t found it yet.

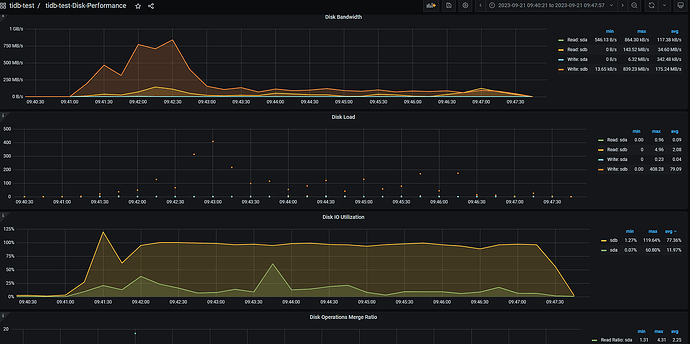

Was the previous IO screenshot from an SSD? The IO is maxed out.

Is this an SSD? Was the disk already fully utilized on the mechanical drive, before you switched to the SSD?

Cloud disk? This doesn’t look like a real local SSD.

To reproduce the issue, I set up the cluster on WSL.

Grafana has a disk monitoring page where you can check the usage. The System Info row at the end of the Overview dashboard includes it.

The hard drive load has reached 100%.
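The same figure can be cross-checked outside Grafana. The sketch below assumes a Linux host that exposes `/proc/diskstats`. It takes two snapshots of that file and computes utilization from the "time spent doing I/O" counter, and write throughput from the "sectors written" counter (512-byte units). The device name `sda`, the 5-second interval, and the sample numbers are made up for illustration.

```python
def parse_diskstats(text):
    """Parse /proc/diskstats text into {device: counters}."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 14:
            continue  # skip blank or unexpected lines
        stats[fields[2]] = {
            "sectors_written": int(fields[9]),  # 512-byte sectors
            "io_ms": int(fields[12]),           # ms spent doing I/O
        }
    return stats


def disk_load(before, after, device, interval_s):
    """Return (utilization %, write MB/s) between two snapshots."""
    b = parse_diskstats(before)[device]
    a = parse_diskstats(after)[device]
    util_pct = 100.0 * (a["io_ms"] - b["io_ms"]) / (interval_s * 1000.0)
    written_bytes = (a["sectors_written"] - b["sectors_written"]) * 512
    write_mb_s = written_bytes / (1024 * 1024) / interval_s
    return util_pct, write_mb_s


# Made-up snapshots taken 5 seconds apart (hypothetical device "sda").
snapshot_1 = "8 0 sda 1000 0 80000 500 2000 0 400000 3000 0 4000 3500"
snapshot_2 = "8 0 sda 1000 0 80000 500 5000 0 580000 7900 0 8950 8400"

util, write_mb = disk_load(snapshot_1, snapshot_2, "sda", 5)
print(f"util={util:.1f}%  write={write_mb:.1f} MB/s")
```

On a real machine the two snapshots would come from reading `/proc/diskstats` twice with a sleep in between. A disk that sits near 100% utilization while writing only a few tens of MB/s is not behaving like a typical local SSD.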

You set up the virtual machine on your own laptop, right? The configuration is underpowered: you deployed 3 TiKV instances on a single machine, and the entire cluster shares 24 GB of memory. TiDB is a distributed system and has certain hardware requirements. Even the best SSD won’t help if other resources are the bottleneck. A performance test that ignores the hardware won’t be convincing.

The issue is that I switched to an SSD, but the TPS did not change at all.

What you said makes sense, but I still need to identify the root cause first and then adjust the resources accordingly. After switching to an SSD, the TPS is still the same as on the mechanical drive, only 300. That doesn’t seem right.

This amount of I/O has already maxed out your disk. You might want to check whether your SSD really performs as you expect. Additionally, TiDB writes the data to TiKV at commit time, so the larger the rows, the more they affect performance. This is unlike MySQL, which writes to the buffer pool first.
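A rough back-of-the-envelope estimate shows why large rows put so much pressure on a single disk. The sketch assumes 50 KB rows at the reported 300 TPS, and 3 replicas that all land on the same physical disk, as in the single-machine deployment described in this thread. The `write_amplification` parameter is a placeholder for the extra writes from the Raft log and RocksDB (WAL, flushes, compaction); its real value was not measured here.

```python
def estimated_disk_write_mb_per_s(tps, row_kb, replicas=3, write_amplification=1.0):
    """Estimate MB/s written to one disk that hosts every replica.

    write_amplification is an assumed multiplier, not a measured value.
    """
    logical_mb_per_s = tps * row_kb / 1024
    return logical_mb_per_s * replicas * write_amplification


# Payload as seen by the client: 300 rows/s * 50 KB.
logical = estimated_disk_write_mb_per_s(300, 50, replicas=1)

# Same payload once each of the 3 TiKV instances on the disk stores a copy.
replicated = estimated_disk_write_mb_per_s(300, 50, replicas=3)

print(f"logical:    {logical:.1f} MB/s")
print(f"replicated: {replicated:.1f} MB/s (before any write amplification)")
```

Even before write amplification, roughly 15 MB/s of client payload becomes about 44 MB/s on the shared disk. Comparing that figure with the disk's measured sustained write throughput is one way to judge whether the disk is the bottleneck.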

Do you think it’s a disk bottleneck?

Could you provide the SSD model and capacity before and after the change, and how many TiKV instances are used per disk?