Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: 为何非分区表在修改分区时报错

【TiDB Usage Environment】Production Environment

【TiDB Version】V4.0.8

【Reproduction Path】

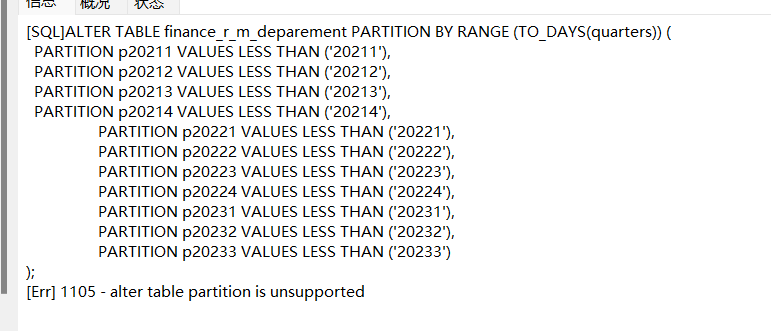

ALTER TABLE finance_r_m_deparement PARTITION BY RANGE (TO_DAYS(quarters)) (

PARTITION p20211 VALUES LESS THAN (‘20211’),

PARTITION p20212 VALUES LESS THAN (‘20212’),

PARTITION p20213 VALUES LESS THAN (‘20213’),

PARTITION p20214 VALUES LESS THAN (‘20214’),

PARTITION p20221 VALUES LESS THAN (‘20221’),

PARTITION p20222 VALUES LESS THAN (‘20222’),

PARTITION p20223 VALUES LESS THAN (‘20223’),

PARTITION p20224 VALUES LESS THAN (‘20224’),

PARTITION p20231 VALUES LESS THAN (‘20231’),

PARTITION p20232 VALUES LESS THAN (‘20232’),

PARTITION p20233 VALUES LESS THAN (‘20233’)

);

【Encountered Problem: Problem Phenomenon and Impact】

There is currently a large table with 30 million rows of data, and I want to partition it by time. The result is as shown in the figure below.

【Attachment: Screenshot/Log/Monitoring】

Online conversion from a regular table to a partitioned table is not supported.

So is there an efficient way to modify such tables into partitioned tables?

Creating a new partitioned table and exporting/importing should be the fastest.

3000kw of data is not much. Create a partitioned table and then batch insert in select.

As mentioned by h5n1 above, TiDB does not support modifying an existing table to a partitioned table. Therefore, to convert an existing table with data (assuming the table name is T) to a partitioned table, you can currently only do the following:

- Create a new partitioned table T_tmp, with the same structure as table T except for the partition information.

- This step is just a table creation statement and is very quick.

- Import the data from table T into T_tmp.

- Since this step involves a large amount of data migration, it is not recommended to use insert select from (large transaction, too slow).

- You can consider using more efficient and convenient non-transactional DML (非事务 DML 语句 | PingCAP 文档中心), but non-transactional DML enhances performance in batch data processing scenarios at the cost of sacrificing the atomicity and isolation of transactions.

- It is recommended to use the official dumpling + lightning tools for export and import.

- If you have Flink real-time stream synchronization tools, using these third-party tools is also a good choice. We usually use this method, which handles transactions and has high performance (QPS reaches tens of thousands).

- Verify the data consistency between table T_tmp and table T.

- A simple and straightforward way is to directly compare the total number of rows on both sides and ensure the sampled content is the same.

- If you need to check each row of data, you can use the sync-diff-inspector tool to verify the consistency of upstream and downstream data at a certain point in time.

- Drop table T and rename T_tmp to T.

At this point, the conversion from a regular table to a partitioned table is complete.

Therefore, it is necessary to evaluate whether to create a partitioned table at the initial stage of business deployment. If so, the assessment and decision must be completed before creating the table, and the partitioned table should be created in advance. Otherwise, handling it later will be more troublesome, especially when the data volume scales up.

This topic was automatically closed 60 days after the last reply. New replies are no longer allowed.