Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: Will lost Region replicas be automatically rebuilt on other nodes?

[TiDB Usage Environment] Production / Testing / PoC

[TiDB Version]

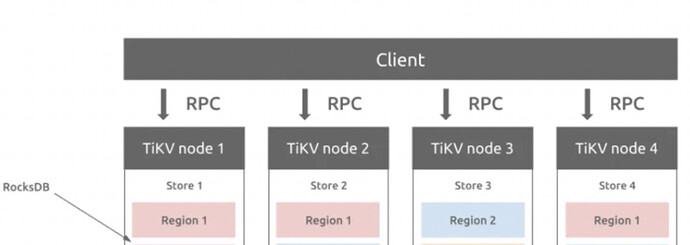

Assume there are 4 TiKV nodes and each Region has 3 replicas.

If TiKV node2 goes down at 10:00, all Region replicas on node2 become unavailable. Will these replicas be automatically rebuilt on other nodes?

For example, if the replica of region1 on node2 becomes unavailable, will a new replica be automatically created on node3?

Suppose node2 restarts 10 minutes later (at 10:10). How will the Region replicas on node2 be handled then?

In a MySQL MGR cluster, a lost node can rejoin through binlog-based incremental recovery or a full clone.

I wonder what TiDB's mechanism is for this.

It’s not that intelligent, right?

To answer this, first check how many replicas you have configured. In your scenario:

- If you have configured 4 replicas, only three TiKV nodes remain, so PD cannot schedule a replacement: each replica of a Region must be on a different node.

- If you have configured 3 replicas, PD's checks will detect that the Region is missing a healthy replica and will add a new one on another node.
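The two cases above can be sketched as a placement check. This is a simplified model, not PD's code: it assumes each store holds at most one replica of a Region and ignores placement rules and location labels. The store IDs and the layout of region1 (replicas on stores 1, 2 and 3) are hypothetical.

```python
from __future__ import annotations


def candidate_stores(healthy_stores: set[int], live_replica_stores: set[int]) -> list[int]:
    """Healthy stores that do not already hold a replica of this Region.

    Simplified model: a store holds at most one replica of a given Region,
    and placement rules / location labels are ignored.
    """
    return sorted(healthy_stores - live_replica_stores)


def can_restore_replicas(max_replicas: int, healthy_stores: set[int],
                         live_replica_stores: set[int]) -> bool:
    """True if every missing replica can be placed on a distinct healthy store."""
    missing = max_replicas - len(live_replica_stores)
    return missing <= len(candidate_stores(healthy_stores, live_replica_stores))


# Hypothetical cluster: stores 1-4, store 2 is down.
healthy = {1, 3, 4}

# 3 replicas: region1 had replicas on stores 1, 2, 3; stores 1 and 3 survive.
print(candidate_stores(healthy, {1, 3}))             # [4]
print(can_restore_replicas(3, healthy, {1, 3}))      # True

# 4 replicas: one replica on every store; stores 1, 3, 4 survive.
print(can_restore_replicas(4, healthy, {1, 3, 4}))   # False
```

Under this model, if region1 already had a replica on node3, the lost replica could not be rebuilt there; it would go to a store without one, such as store 4.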

With 3 replicas, will PD automatically create the new replicas on other nodes?

If they are created automatically and node2 then comes back, what happens?

Bookmarking this. Good question; waiting for an expert's answer.

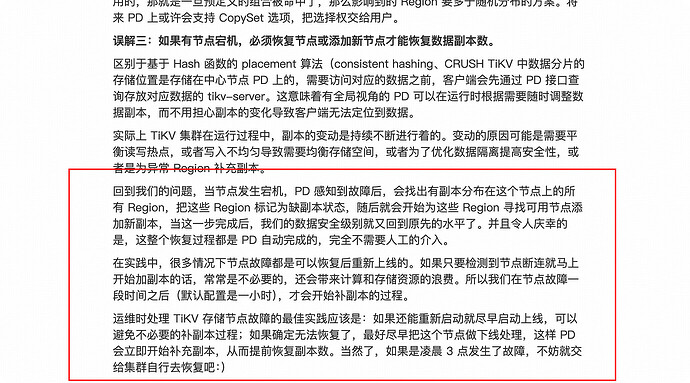

Without manual intervention, PD's default behavior when a TiKV node fails is to wait 30 minutes (configurable via max-store-down-time), then mark the node as Down and start replenishing replicas for the affected Regions.
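The timeout rule can be modeled as below. This is a simplified sketch, not PD's implementation: it treats the time since the store's last heartbeat as the only input, and the dates are hypothetical.

```python
from datetime import datetime, timedelta

# Default value of PD's max-store-down-time setting (30 minutes).
DEFAULT_MAX_STORE_DOWN_TIME = timedelta(minutes=30)


def is_store_down(last_heartbeat: datetime, now: datetime,
                  max_store_down_time: timedelta = DEFAULT_MAX_STORE_DOWN_TIME) -> bool:
    """Simplified rule: the store counts as Down (and PD begins replenishing
    replicas) once no heartbeat has arrived for longer than max_store_down_time."""
    return now - last_heartbeat > max_store_down_time


down_at = datetime(2024, 1, 1, 10, 0)   # hypothetical: node2 stops at 10:00
print(is_store_down(down_at, datetime(2024, 1, 1, 10, 10)))  # False
print(is_store_down(down_at, datetime(2024, 1, 1, 10, 31)))  # True
print(is_store_down(down_at, datetime(2024, 1, 1, 10, 31),
                    max_store_down_time=timedelta(hours=2)))  # False
```

In the 10:00/10:10 scenario from the question, node2 returns before the default timeout expires, so under this rule PD has not yet started replenishing replicas, and node2's existing replicas catch up through normal Raft replication.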

In practice, if the node failure is known to be unrecoverable, decommission the node immediately. This lets PD replenish replicas as soon as possible and reduces the risk of data loss. Conversely, if the node can be recovered but not within 30 minutes, temporarily increase max-store-down-time. This prevents unnecessary replica replenishment after the timeout and the resources it would waste.
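The advice above reduces to a small decision rule. The helper below is hypothetical and only encodes that rule; the actual change is made through PD's configuration (for example, pd-ctl's `config set max-store-down-time`), and decommissioning follows your usual scale-in procedure.

```python
from datetime import timedelta

DEFAULT_MAX_STORE_DOWN_TIME = timedelta(minutes=30)


def plan_for_failed_store(recoverable: bool, expected_outage: timedelta,
                          max_store_down_time: timedelta = DEFAULT_MAX_STORE_DOWN_TIME) -> str:
    """Hypothetical helper encoding the operational advice for a failed TiKV node."""
    if not recoverable:
        # Decommission immediately so PD can replenish replicas right away.
        return "decommission"
    if expected_outage >= max_store_down_time:
        # Raise max-store-down-time temporarily to avoid needless replica copies.
        return "raise max-store-down-time"
    # The node should be back before PD marks it Down; nothing to do.
    return "wait"


print(plan_for_failed_store(True, timedelta(hours=2)))  # raise max-store-down-time
```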

Since v5.2.0, TiKV has a slow-node detection mechanism: it samples requests inside TiKV and computes a slow score from 1 to 100, and a node whose score is 80 or higher is marked as slow. You can add evict-slow-store-scheduler to detect and handle slow nodes: when exactly one TiKV node is slow and its slow score reaches the threshold (80 by default), the Leaders on that node are evicted (similar to what evict-leader-scheduler does).
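A sketch of the trigger condition described above (scores from 1 to 100, threshold 80, exactly one slow store). It does not model how TiKV computes the score or how the scheduler later returns Leaders; the scheduler itself is enabled with pd-ctl, e.g. `scheduler add evict-slow-store-scheduler`.

```python
from __future__ import annotations

SLOW_SCORE_THRESHOLD = 80  # default threshold described above


def store_to_evict(slow_scores: dict[int, int],
                   threshold: int = SLOW_SCORE_THRESHOLD) -> int | None:
    """Return the store whose Leaders should be evicted, or None.

    Acts only when exactly one store's slow score is at or above the threshold.
    """
    slow = [store_id for store_id, score in slow_scores.items() if score >= threshold]
    return slow[0] if len(slow) == 1 else None


print(store_to_evict({1: 1, 2: 85, 3: 10, 4: 1}))  # 2
print(store_to_evict({1: 90, 2: 85}))              # None: more than one slow store
```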

Yes. PD automatically replenishes replicas after a delay controlled by max-store-down-time. If node2 comes back after that, it finds that its replicas are outdated (they have already been removed from their Regions) and deletes them.
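A simplified model of how a returning node can tell that its replica is outdated, using the Region's configuration version (conf_ver), which changes with every membership change. The `RegionMeta` type and the version numbers are hypothetical; real TiKV learns the newer membership from PD or from other peers and then cleans up the stale peer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionMeta:
    region_id: int
    conf_ver: int             # bumped by every membership (conf) change
    peer_stores: frozenset    # stores that currently hold a replica


def is_stale_peer(store_id: int, local: RegionMeta, current: RegionMeta) -> bool:
    """A returning store's replica is stale if the Region's membership has
    moved on and no longer includes this store."""
    if local.region_id != current.region_id:
        raise ValueError("different Regions")
    return current.conf_ver > local.conf_ver and store_id not in current.peer_stores


# Hypothetical region1 before node2 fails: replicas on stores 1, 2, 3.
before = RegionMeta(region_id=1, conf_ver=5, peer_stores=frozenset({1, 2, 3}))
# After the timeout PD adds a replica on store 4 and removes the one on store 2
# (two conf changes).
after = RegionMeta(region_id=1, conf_ver=7, peer_stores=frozenset({1, 3, 4}))

print(is_stale_peer(2, before, after))   # True: node2's copy should be deleted
print(is_stale_peer(2, before, before))  # False: back before the timeout, it catches up
```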

I always thought it would only provide normal services and wouldn’t automatically replicate unless there was manual intervention.


Thank you for the explanation, expert.

Got it, I hadn’t really looked into the max-store-down-time parameter before.