Hey TiDB folks,

How can I configure TiDB on Kubernetes so that stale reads within a zone don’t generate cross-zone traffic?

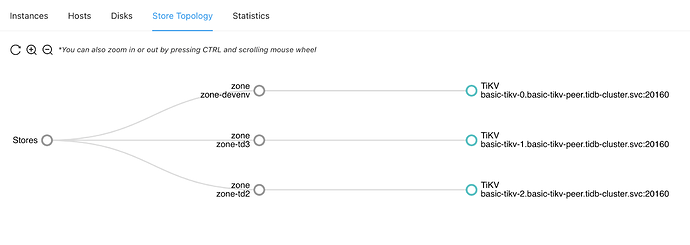

For example, my test K8s cluster has 3 nodes, each in a physically separate location that I treat as its own zone.

On each node I’m running one PD, one TiKV, and one TiDB instance.

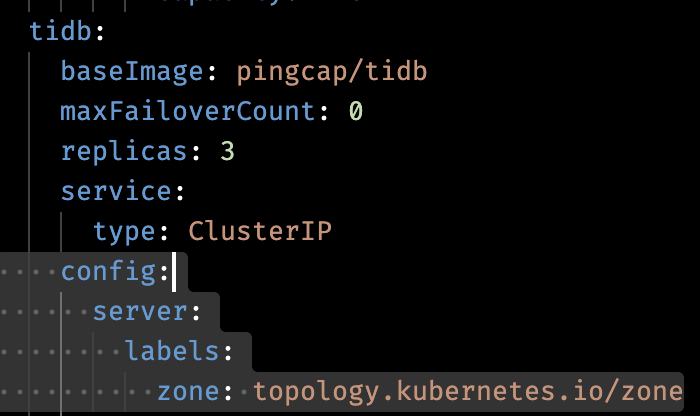

Here’s the K8s TidbCluster config.

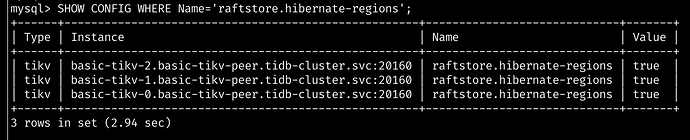

Note that I’m using `location-labels` and `isolation-level` to hint the network topology to TiDB.
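
In case the screenshot is hard to read, here is a rough sketch of where those two settings sit in a TidbCluster spec. The cluster name and the label keys are assumptions for illustration (the keys are chosen to match my node labels), not a verbatim copy of my manifest, and unrelated fields are left out.

```yaml
# Illustrative sketch only; not a verbatim copy of the manifest in the screenshot.
apiVersion: pingcap.com/v1alpha1
kind: TidbCluster
metadata:
  name: basic   # hypothetical cluster name
spec:
  # version, replicas, storage requests, and the tikv/tidb sections are omitted here
  pd:
    config: |
      [replication]
      # Assumed label keys, ordered from the widest to the narrowest topology level.
      location-labels = ["topology.kubernetes.io/zone", "kubernetes.io/hostname"]
      # Must be one of the location-labels entries above.
      isolation-level = "topology.kubernetes.io/zone"
```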

Of course, I’ve also labeled the nodes:

> kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

devenv Ready <none> 125m v1.26.2+k0s beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=devenv,kubernetes.io/os=linux,topology.kubernetes.io/zone=zone-devenv

td2 Ready <none> 125m v1.26.2+k0s beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=td2,kubernetes.io/os=linux,topology.kubernetes.io/zone=zone-td2

td3 Ready <none> 125m v1.26.2+k0s beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=td3,kubernetes.io/os=linux,topology.kubernetes.io/zone=zone-td3

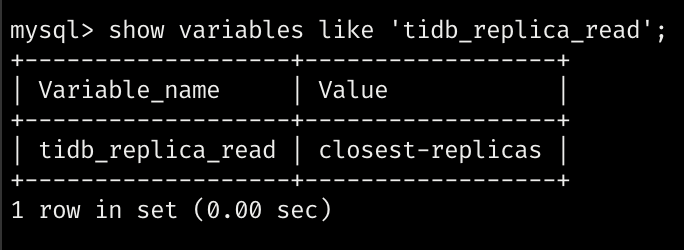

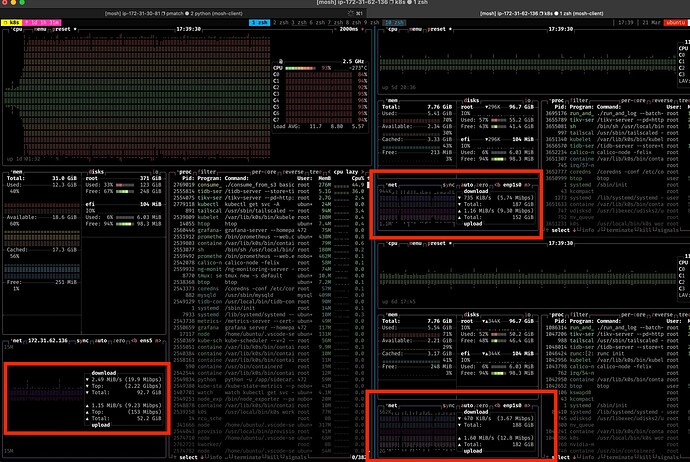

Unfortunately, even when I do pure stale reads* from a zone to the DB server in the same zone, I’m seeing cross-zone traffic.

It appears as though the DB server is randomly assigning reads to KV servers, regardless of where they are.

What I expected to happen is for the DB to say “oh this is stale so my local KV can service it” and then send it to the local KV.

Thanks!

*My stale reads look like `SELECT sk FROM sk AS OF TIMESTAMP TIDB_BOUNDED_STALENESS(NOW() - INTERVAL 60 SECOND, NOW()) WHERE pkh4 IN (...)`